Dataset too large to import. How can I import a certain number of rows every x hours? - Question & Answer - QuickSight Community

By A Mystery Man Writer

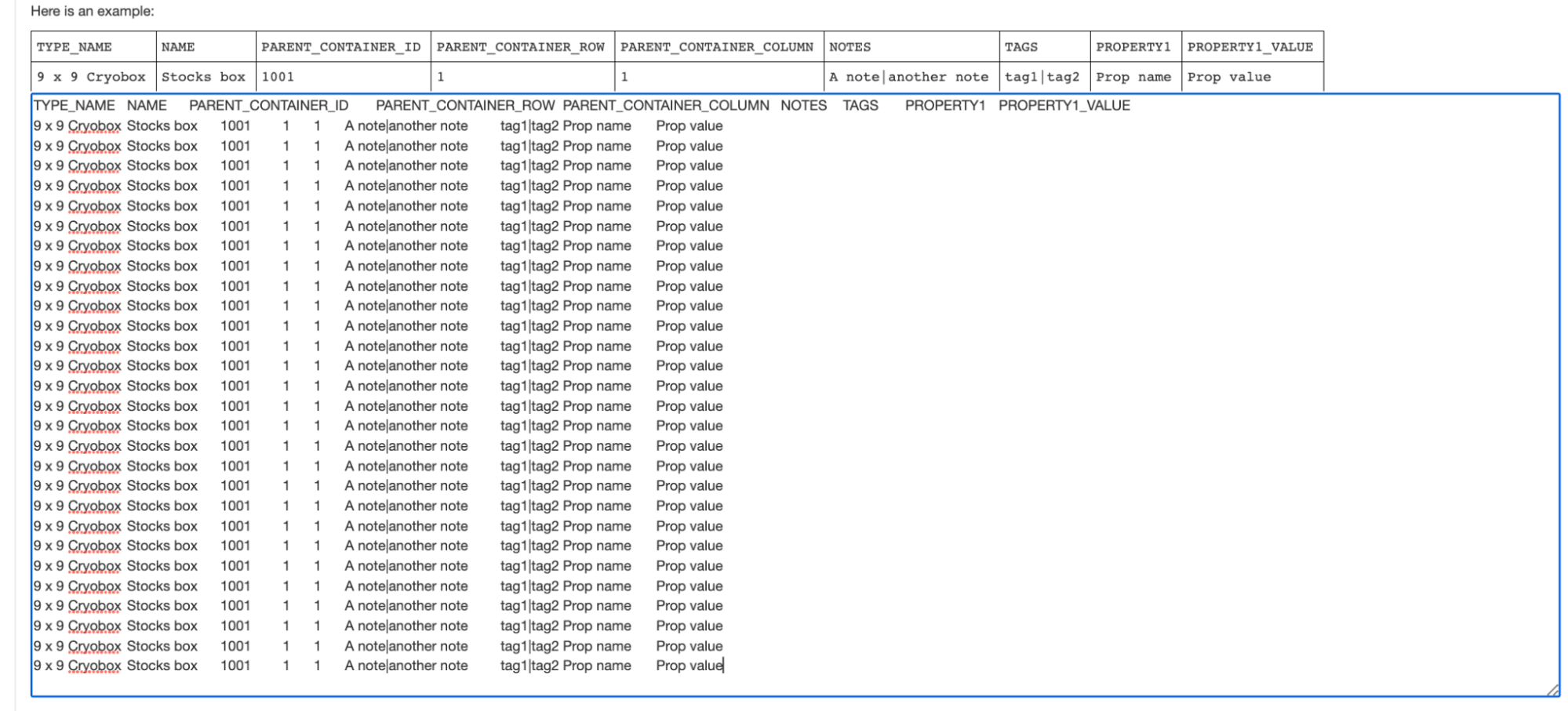

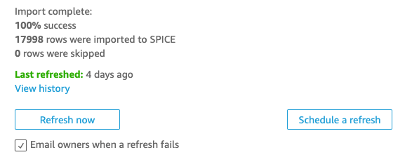

I'm trying to load data from a Redshift cluster, but the import fails because the dataset is too large to be imported using SPICE (Figure 1). How can I import, for example, 300k rows every hour, so that I can slowly build up to the full dataset? Maybe an incremental refresh is the solution? The problem is that I don't understand what the “Window size” configuration means. Do I put 300000 in this field (Figure 2)?
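For reference, here is a minimal sketch of how the “Window size” setting might map onto the QuickSight API. It assumes the field corresponds to the look-back window of an incremental refresh, which is expressed as a size plus a time unit (hours, days, or weeks) on a date column, not as a row count. The column name `created_at` and the helper `build_refresh_properties` are hypothetical; the dictionary layout follows my understanding of the `DataSetRefreshProperties` parameter of boto3's `put_data_set_refresh_properties` and should be checked against the current documentation.

```python
# Sketch only: builds the payload locally and makes no AWS calls.
VALID_UNITS = {"HOUR", "DAY", "WEEK"}  # assumed allowed values for SizeUnit


def build_refresh_properties(column_name, size, size_unit):
    """Build an incremental-refresh look-back window configuration.

    column_name: a date/timestamp column in the dataset (hypothetical here)
    size:        how many units of time to look back
    size_unit:   one of HOUR, DAY, WEEK
    """
    if size_unit not in VALID_UNITS:
        raise ValueError(f"size_unit must be one of {sorted(VALID_UNITS)}")
    if size <= 0:
        raise ValueError("size must be a positive number of time units")
    return {
        "RefreshConfiguration": {
            "IncrementalRefresh": {
                "LookbackWindow": {
                    "ColumnName": column_name,
                    "Size": size,
                    "SizeUnit": size_unit,
                }
            }
        }
    }


# A one-hour window on a hypothetical timestamp column.
props = build_refresh_properties("created_at", 1, "HOUR")

# Applying it would look roughly like this (IDs are placeholders):
# import boto3
# client = boto3.client("quicksight")
# client.put_data_set_refresh_properties(
#     AwsAccountId="<account-id>",
#     DataSetId="<dataset-id>",
#     DataSetRefreshProperties=props,
# )
```

Under this reading, entering 300000 in the field would mean 300,000 hours, days, or weeks, depending on the unit selected, and would not limit the import to 300k rows.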

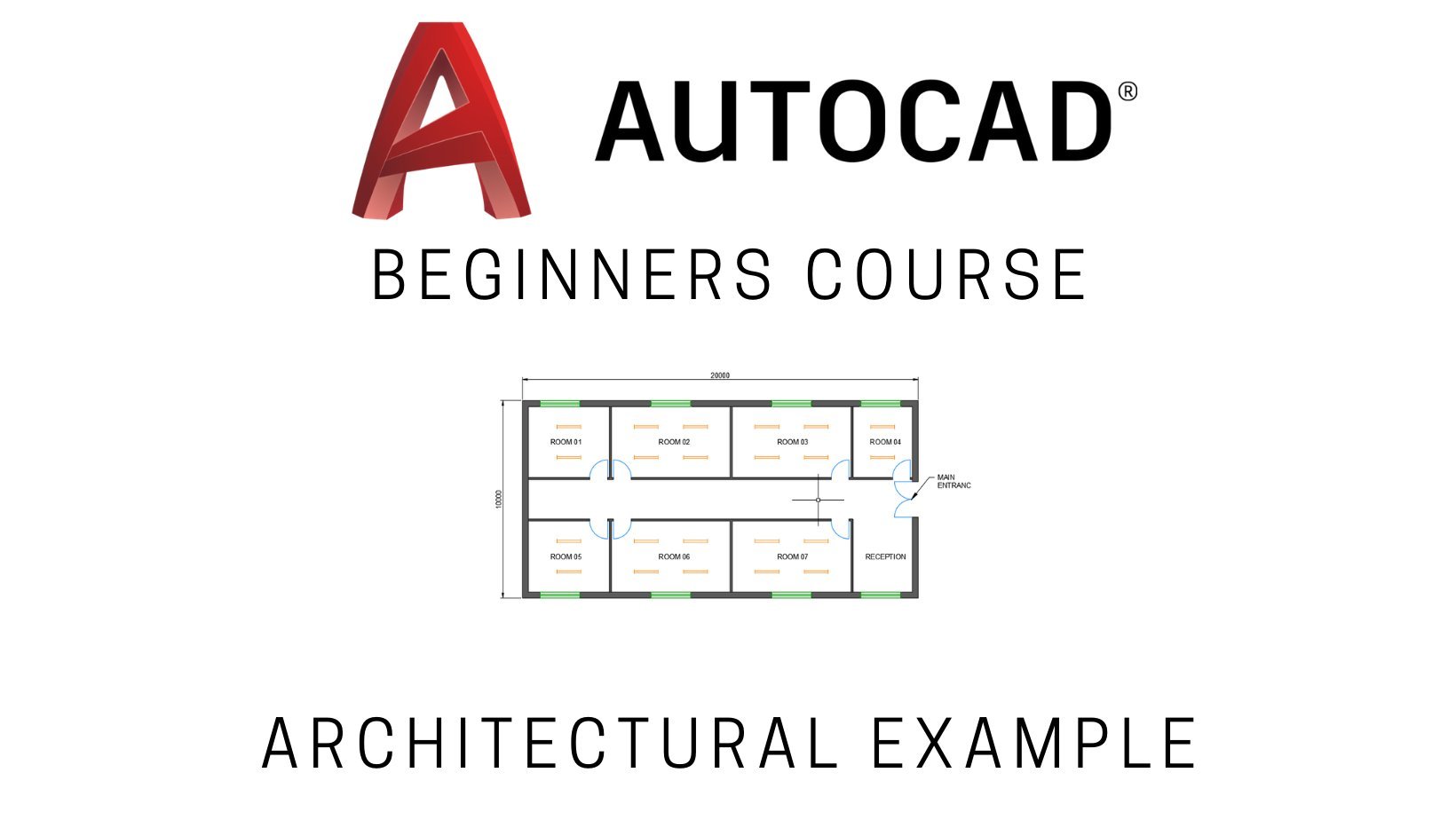
