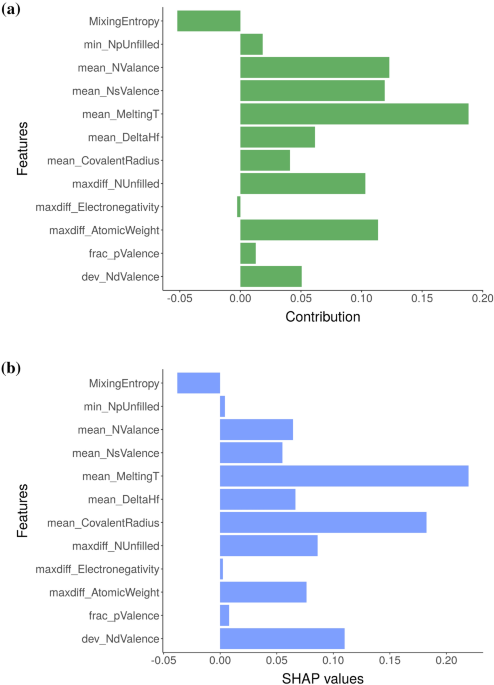

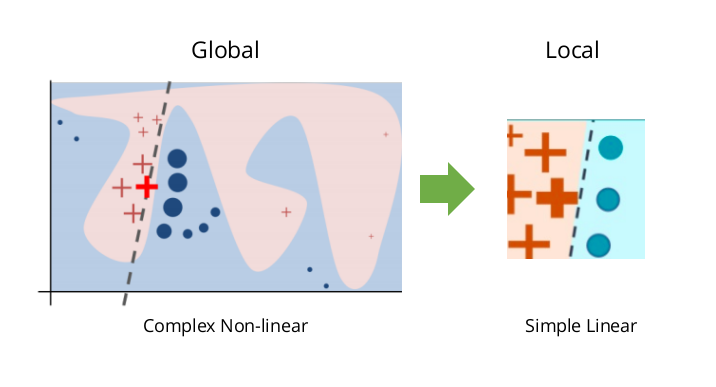

Feature importance based on SHAP-values. On the left side, the

By A Mystery Man Writer

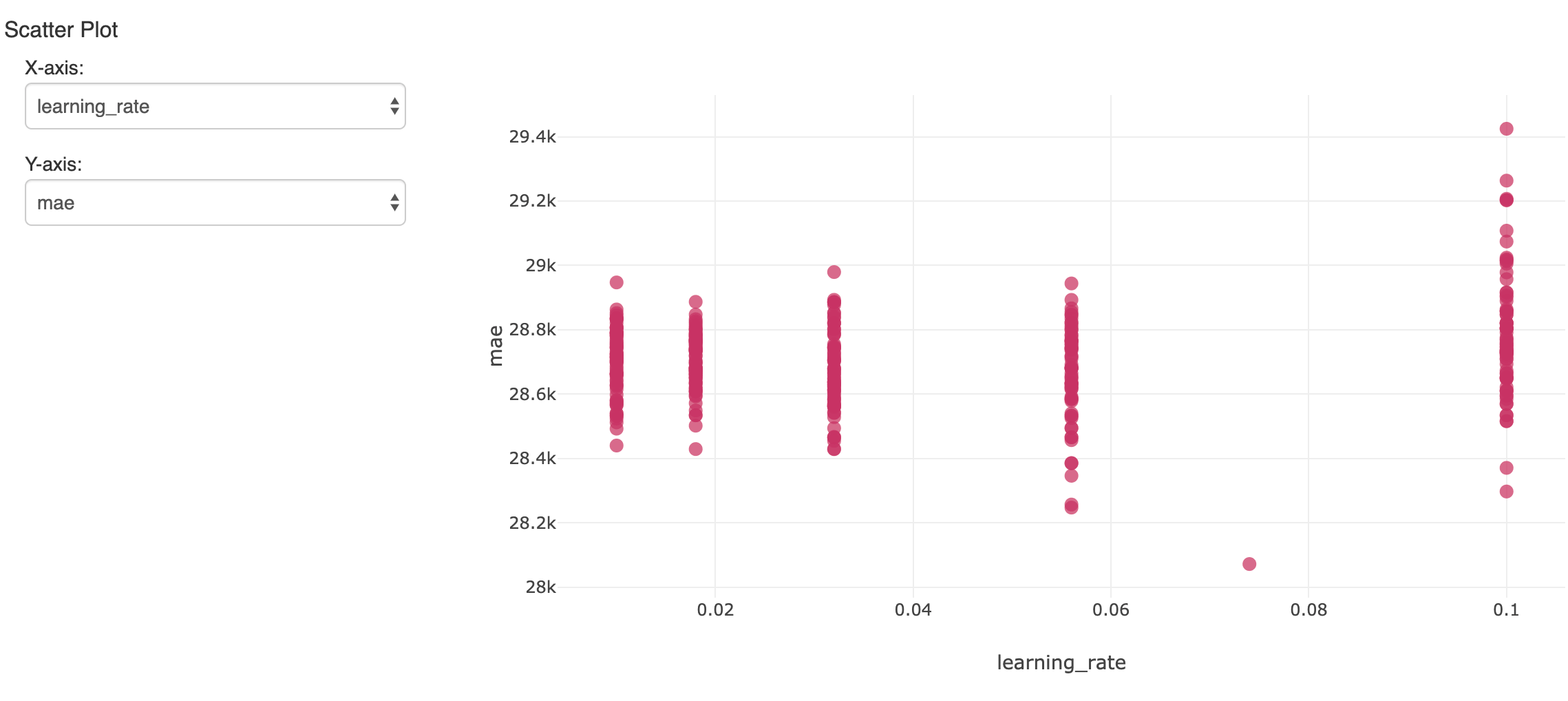

Using SHAP with Machine Learning Models to Detect Data Bias

Jan BOURGOIS, Full Professor, PhD

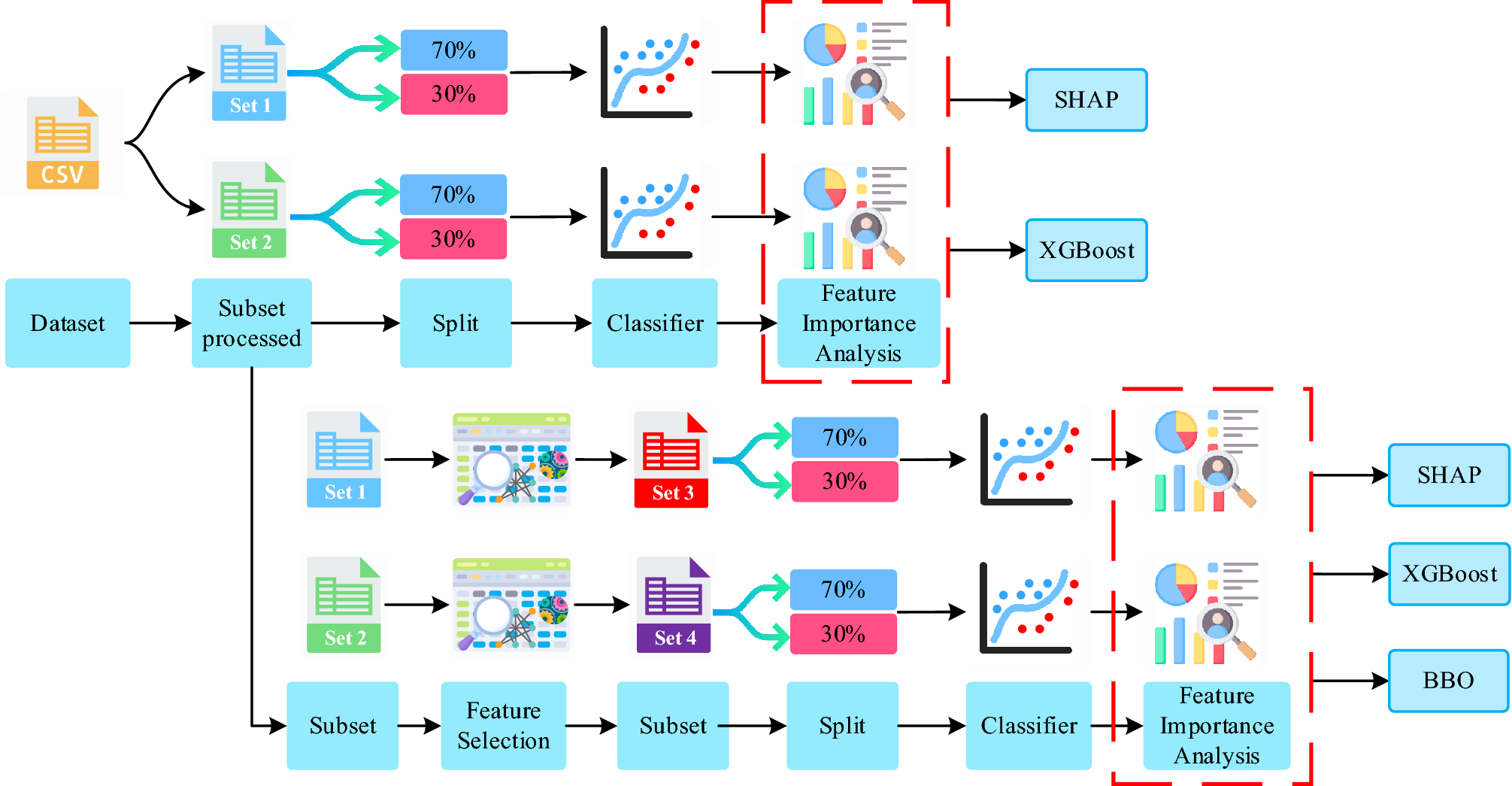

Detection of the chronic kidney disease using XGBoost classifier and explaining the influence of the attributes on the model using SHAP

Interpretability part 3: opening the black box with LIME and SHAP - KDnuggets

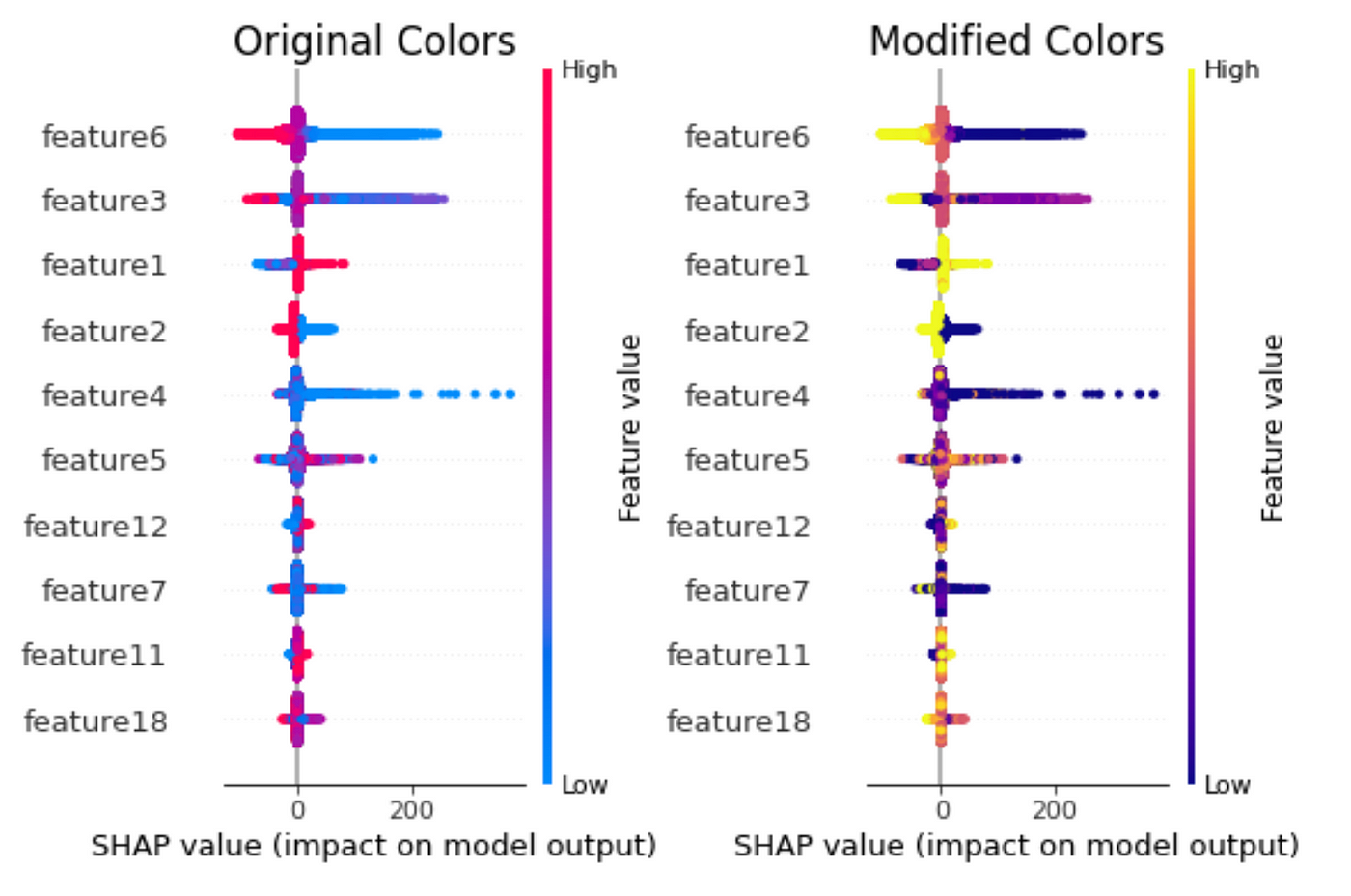

python - Machine Learning Feature Importance Method Disagreement (SHAP) - Cross Validated

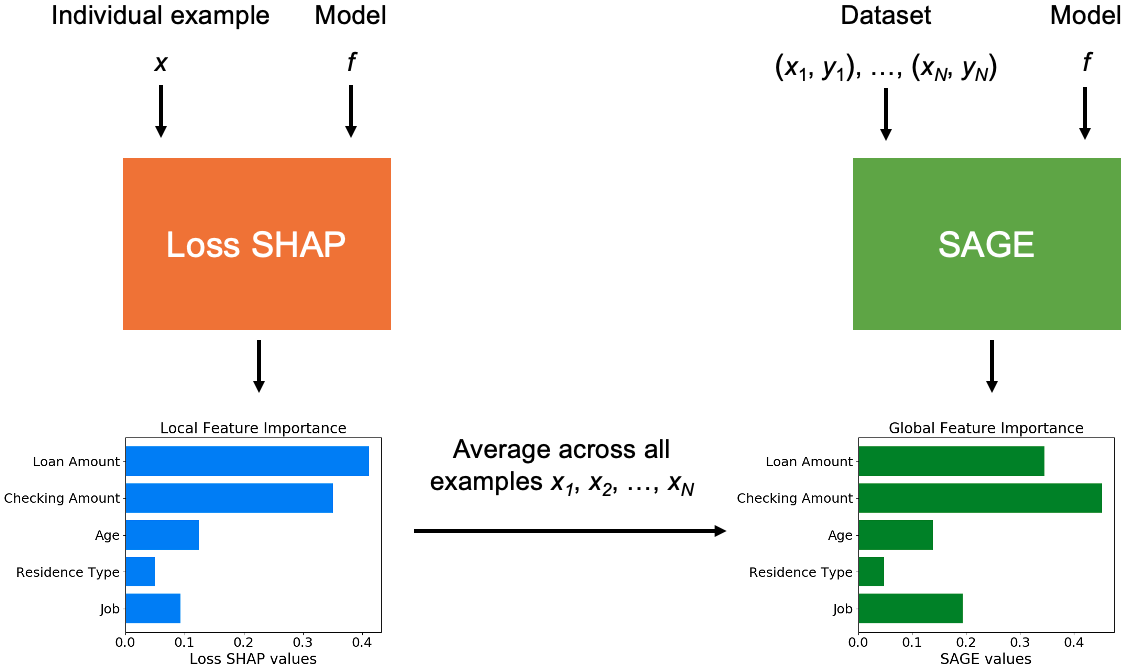

Explaining ML models with SHAP and SAGE

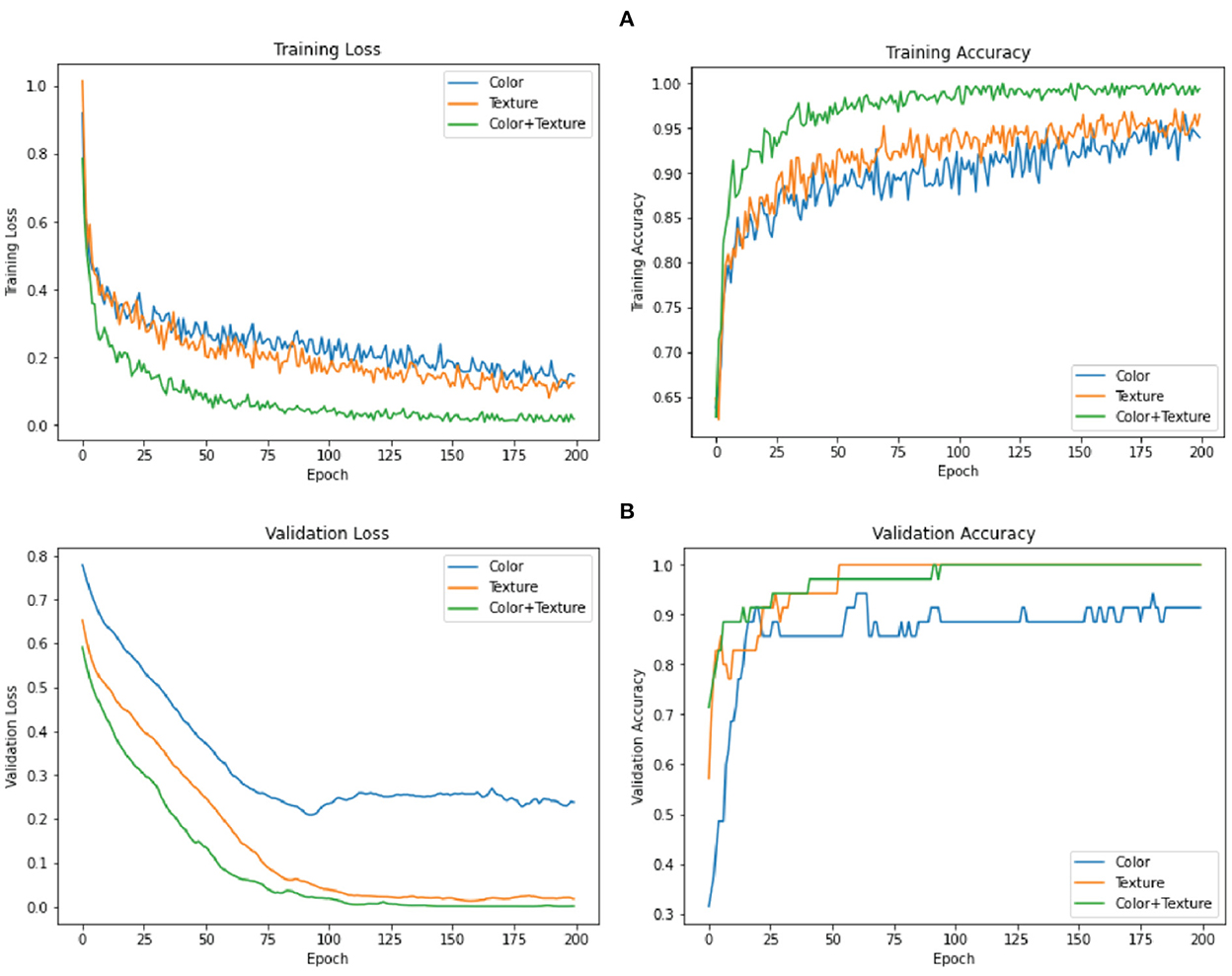

Frontiers | Adulteration detection in minced beef using low-cost

The Shapley (SHAP) values and feature importance of the 1st-level

(PDF) Adulteration detection in minced beef using low-cost color

Explainable AI (XAI) with SHAP - Multi-Class Classification Problem, by Idit Cohen

Data analysis with Shapley values for automatic subject selection in Alzheimer's disease data sets using interpretable machine learning, Alzheimer's Research & Therapy

Feature importance and SHAP value of features in machine learning