Wednesday, Oct 02 2024

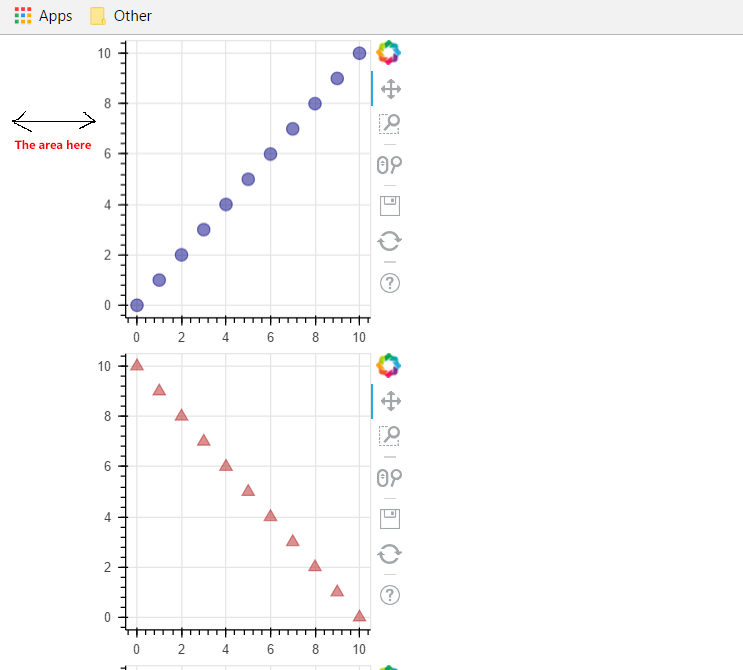

Variable-Length Sequences in TensorFlow Part 1: Optimizing

By A Mystery Man Writer

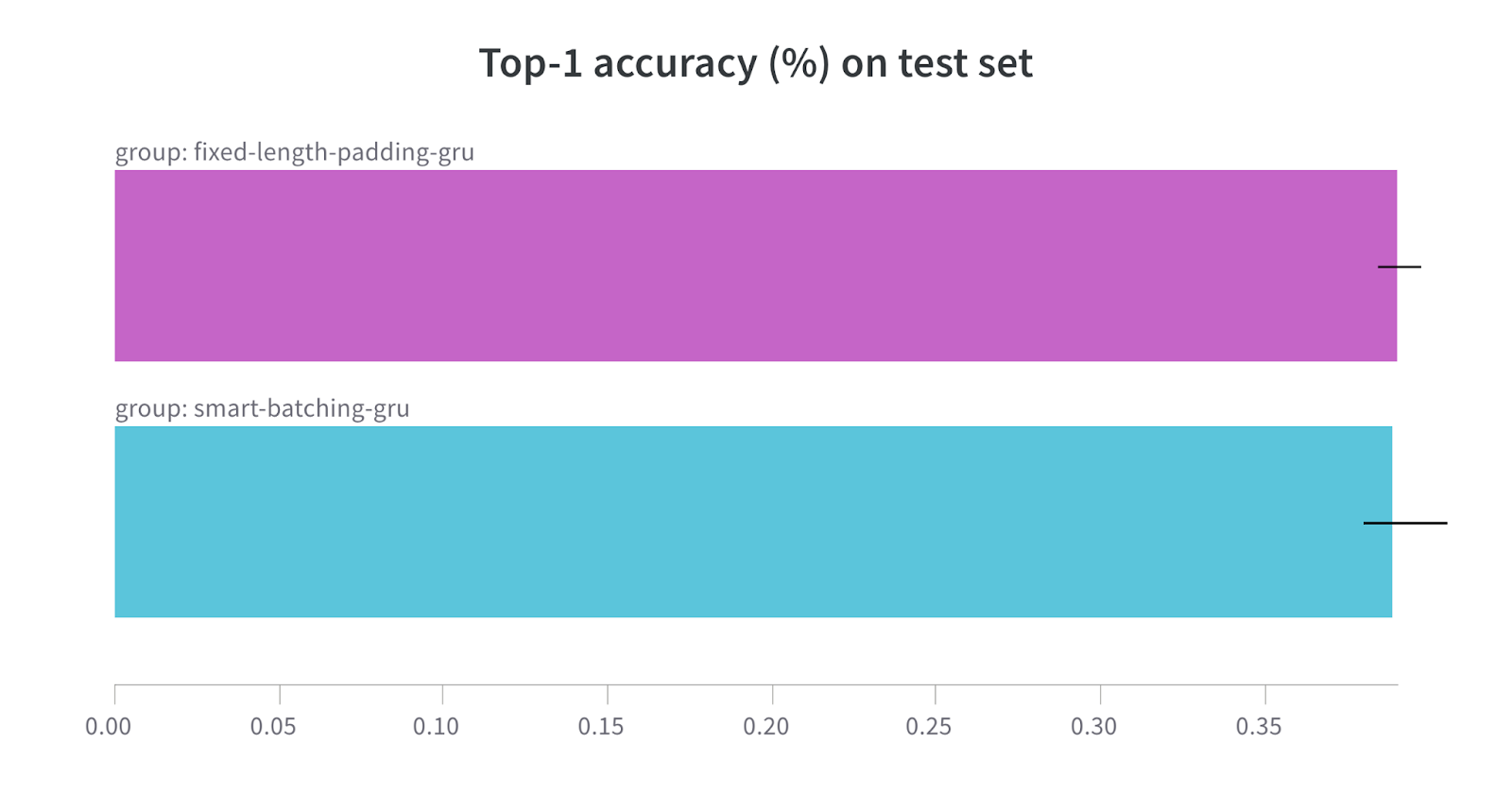

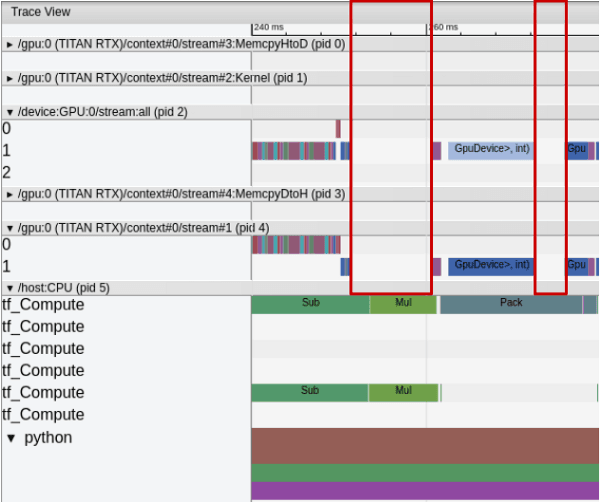

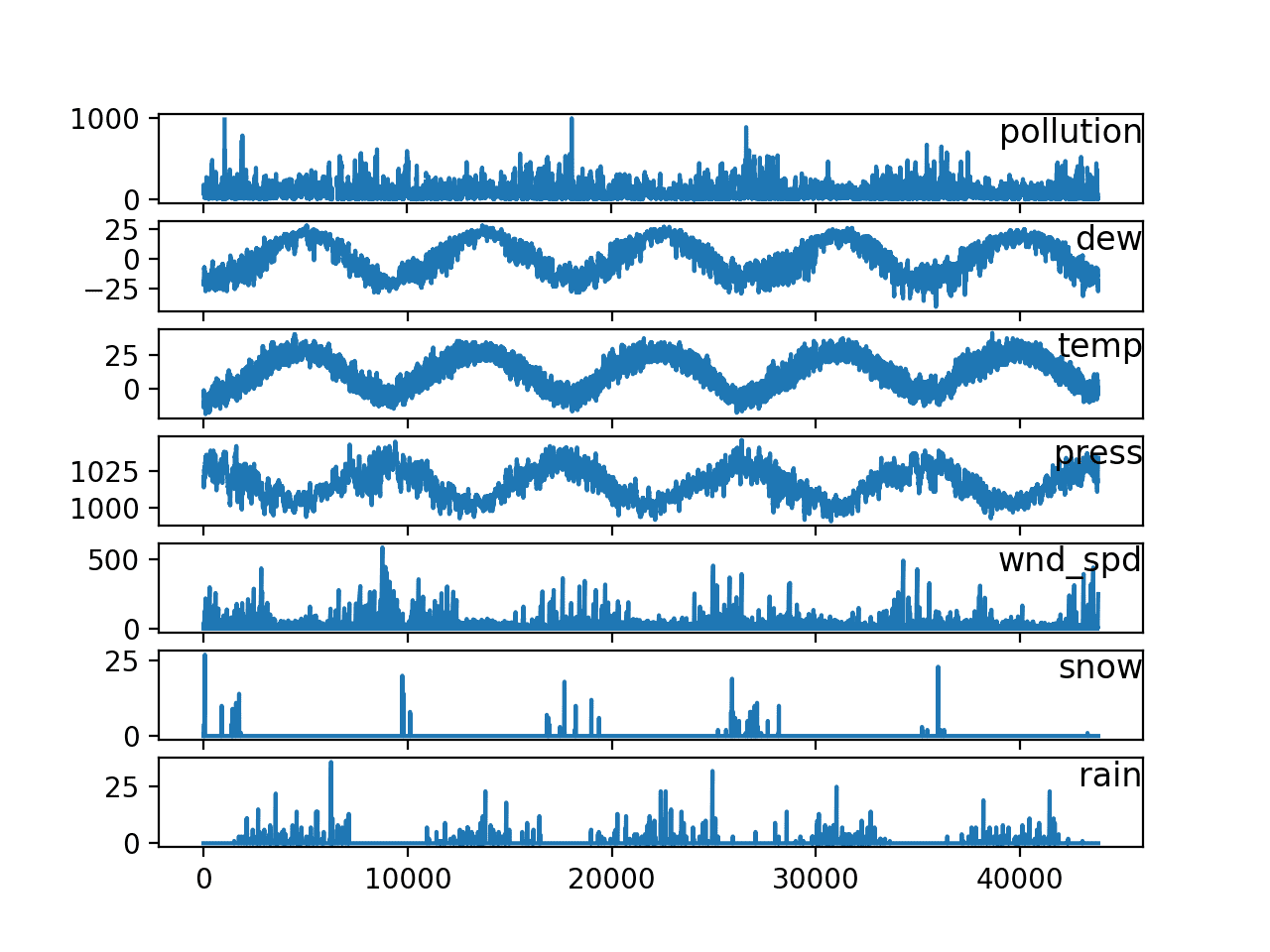

We analyze the impact of sequence padding techniques on model training time for variable-length text data.
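To make the padding cost concrete, here is a minimal sketch in plain Python (no TensorFlow required) that counts how many token slots a model would process under three padding strategies. The helper names, the example lengths, and the batch size are all assumptions chosen for illustration; they are not taken from the article, and token count is only a rough proxy for training time.

```python
from typing import List


def padded_slots_global(lengths: List[int]) -> int:
    """Slots processed when every sequence is padded to the global maximum."""
    return max(lengths) * len(lengths)


def padded_slots_per_batch(lengths: List[int], batch_size: int,
                           sort_first: bool = False) -> int:
    """Slots processed when each batch is padded only to its own maximum.

    With sort_first=True, sequences are grouped by similar length first
    (a simple stand-in for length bucketing).
    """
    ordered = sorted(lengths) if sort_first else list(lengths)
    total = 0
    for start in range(0, len(ordered), batch_size):
        batch = ordered[start:start + batch_size]
        total += max(batch) * len(batch)
    return total


# Hypothetical sequence lengths: a mix of short and long texts.
lengths = [3, 50, 4, 48, 5, 47, 2, 49]

real_tokens = sum(lengths)                                          # 208
global_pad = padded_slots_global(lengths)                           # 400
batch_pad = padded_slots_per_batch(lengths, batch_size=2)           # 388
bucketed_pad = padded_slots_per_batch(lengths, batch_size=2,
                                      sort_first=True)              # 212

print(real_tokens, global_pad, batch_pad, bucketed_pad)
```

In this toy case, padding to the global maximum processes almost twice as many slots as there are real tokens, while grouping similar lengths before batching brings the total close to the real token count. In TensorFlow, per-batch padding of this kind is typically done with `tf.data.Dataset.padded_batch`; whether the article uses that call is not stated in the text above.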

NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on NVIDIA H100 GPUs

TensorFlow 2.0 Tutorial: Optimizing Training Time Performance - KDnuggets

tensorflow/RELEASE.md at master · tensorflow/tensorflow · GitHub

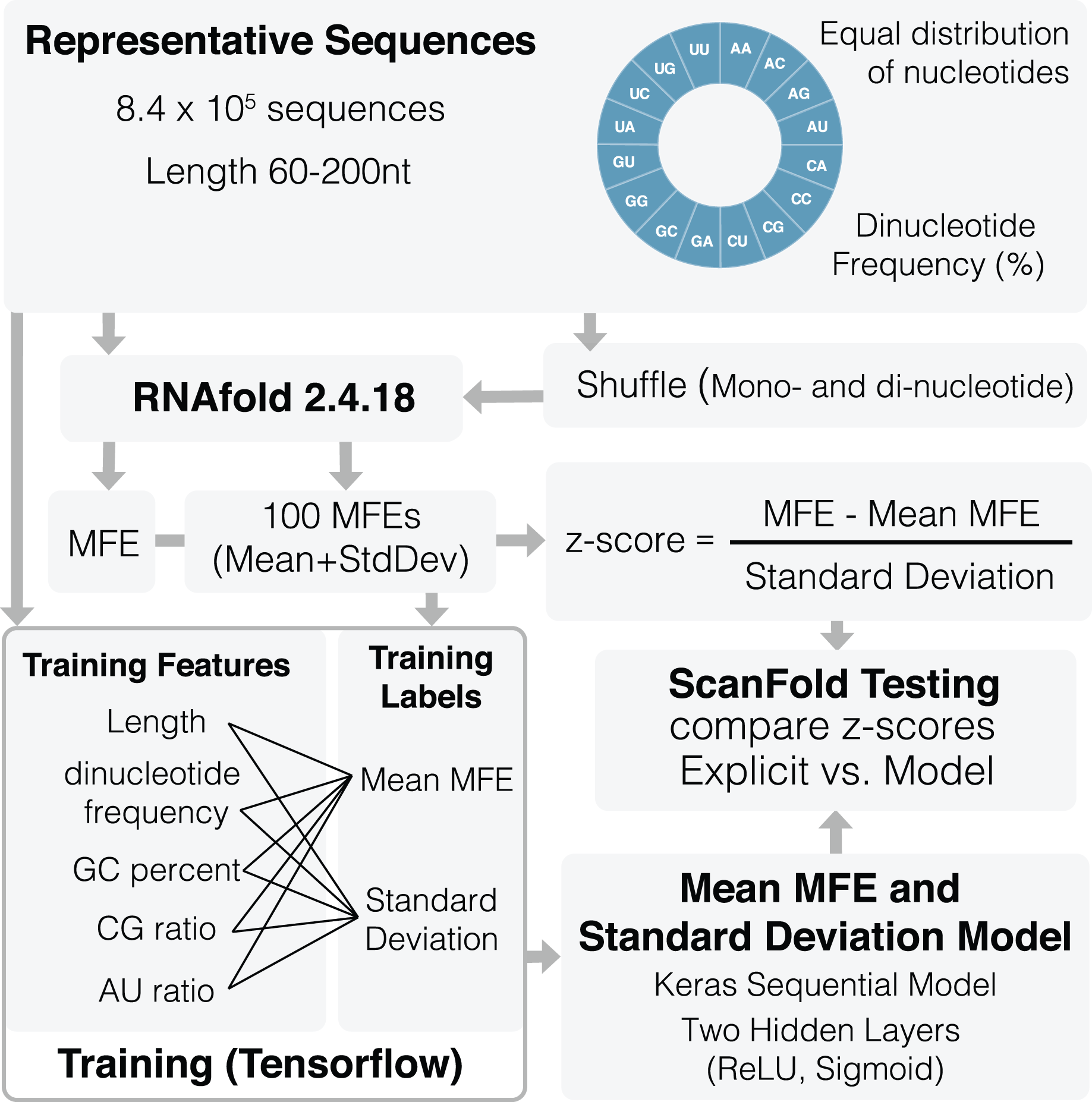

ScanFold 2.0: a rapid approach for identifying potential structured RNA targets in genomes and transcriptomes [PeerJ]

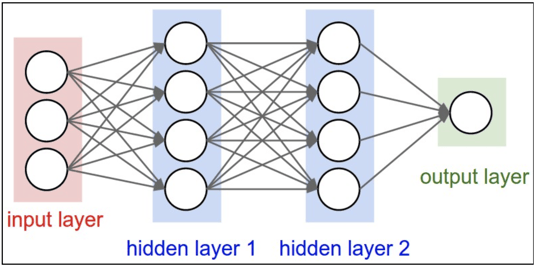

Image Classification with TensorFlow

Sequence-to-function deep learning frameworks for engineered riboregulators

DROP THE STRINGS PADDING ベスト

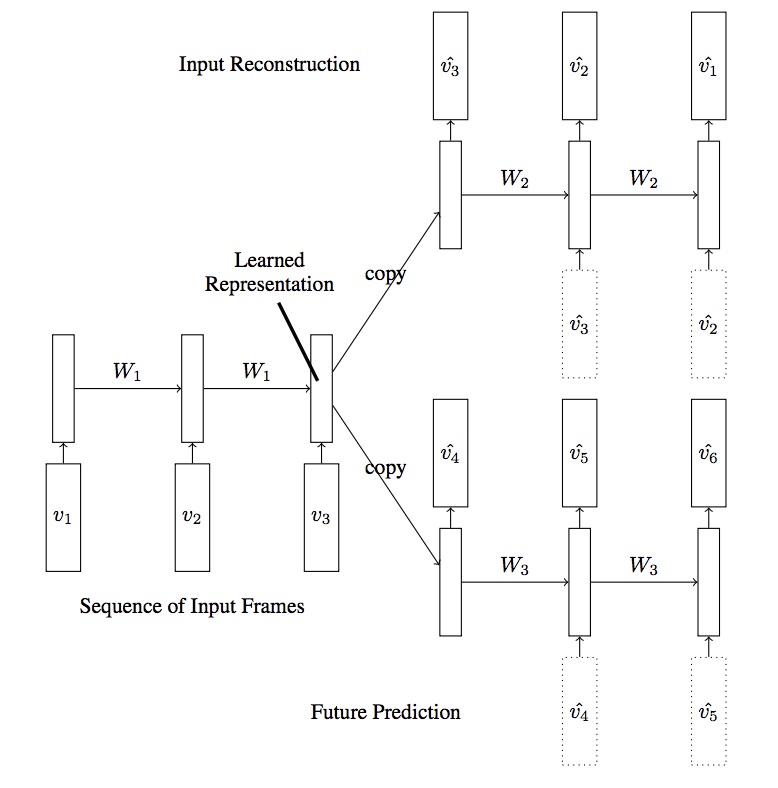

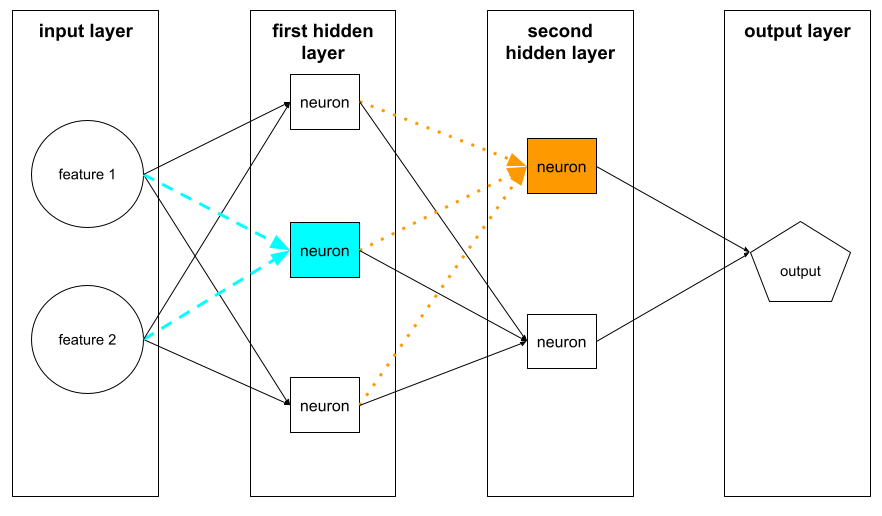

A Gentle Introduction to LSTM Autoencoders

Sensors, Free Full-Text

/static/machine-learning/glos

/wp-content/uploads/2017


©2016-2024, doctommy.com, Inc. or its affiliates