Using LangSmith to Support Fine-tuning

By A Mystery Man Writer

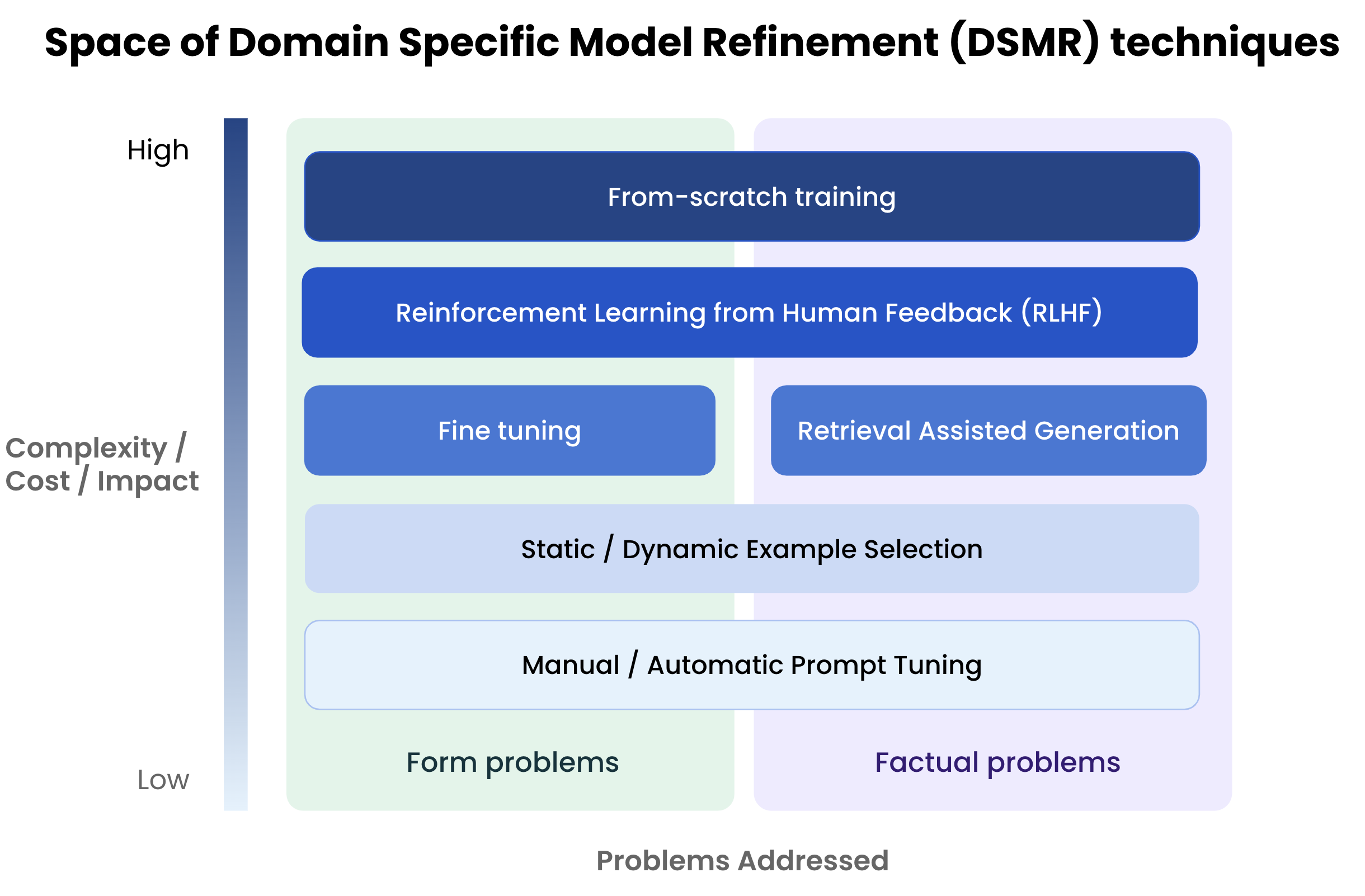

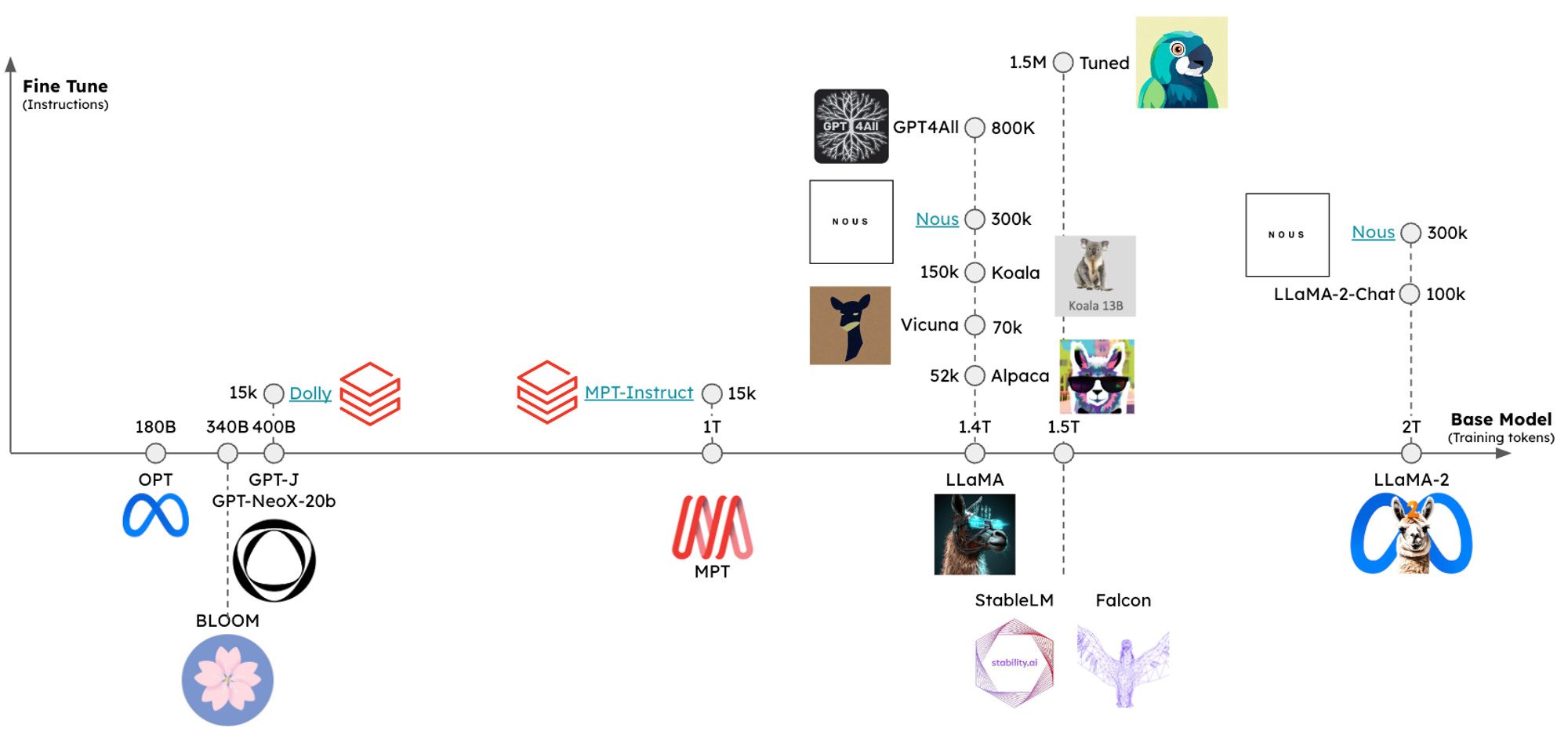

Summary

We created a guide to fine-tuning and evaluating LLMs that uses LangSmith for dataset management and evaluation. We did this both with an open-source LLM, using Colab and Hugging Face for model training, and with OpenAI's new fine-tuning service. As a test case, we fine-tuned LLaMA2-7b-chat and gpt-3.5-turbo for an extraction task (knowledge graph triple extraction) using training data exported from LangSmith, and we evaluated the results with LangSmith as well. The Colab guide is here.

Context

I
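To make the workflow above more concrete, here is a minimal sketch of two steps it implies. The first turns (input text, reference triples) pairs, such as examples exported from a LangSmith dataset, into the chat-style JSON Lines records (`{"messages": [...]}`) that OpenAI's fine-tuning service accepts for gpt-3.5-turbo. The second scores predicted triples against reference triples. Several things here are assumptions rather than the guide's actual code: the LangSmith export step itself is not shown, and `SYSTEM_PROMPT`, `to_chat_example`, `to_jsonl`, and the exact-match `triple_f1` metric are hypothetical illustrations.

```python
import json
from typing import Iterable

# Hypothetical system prompt; the guide's actual prompt is not reproduced here.
SYSTEM_PROMPT = (
    "Extract knowledge graph triples from the text as a JSON list of "
    "[subject, relation, object] lists."
)


def to_chat_example(text: str, triples: list[list[str]]) -> dict:
    """Convert one (input text, reference triples) pair into a chat-format record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": text},
            # The target completion is the serialized list of triples.
            {"role": "assistant", "content": json.dumps(triples)},
        ]
    }


def to_jsonl(pairs: Iterable[tuple[str, list[list[str]]]]) -> str:
    """Serialize pairs as JSON Lines: one training example per line."""
    return "\n".join(json.dumps(to_chat_example(text, triples)) for text, triples in pairs)


def triple_f1(predicted: list[list[str]], reference: list[list[str]]) -> float:
    """Set-based F1 over exact-match triples (a hypothetical, deliberately simple metric)."""
    pred = {tuple(t) for t in predicted}
    ref = {tuple(t) for t in reference}
    if not pred or not ref:
        # Both empty counts as a perfect match; exactly one empty scores 0.
        return float(pred == ref)
    true_positives = len(pred & ref)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(pred)
    recall = true_positives / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    pairs = [
        ("Marie Curie won the Nobel Prize in Physics.",
         [["Marie Curie", "won", "Nobel Prize in Physics"]]),
    ]
    print(to_jsonl(pairs))
    predicted = [["Marie Curie", "won", "Nobel Prize in Physics"],
                 ["Marie Curie", "born in", "Warsaw"]]
    print(triple_f1(predicted, pairs[0][1]))
```

Exact string matching is strict: a model that writes "won" as "received" gets no credit. An LLM-as-judge or fuzzy-matching evaluator, as one might configure in LangSmith, would be more forgiving, at the cost of being less deterministic.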

Nicolas A. Duerr on LinkedIn: #innovation #ai #artificialintelligence #business

Multi-Vector Retriever for RAG on tables, text, and images 和訳|p

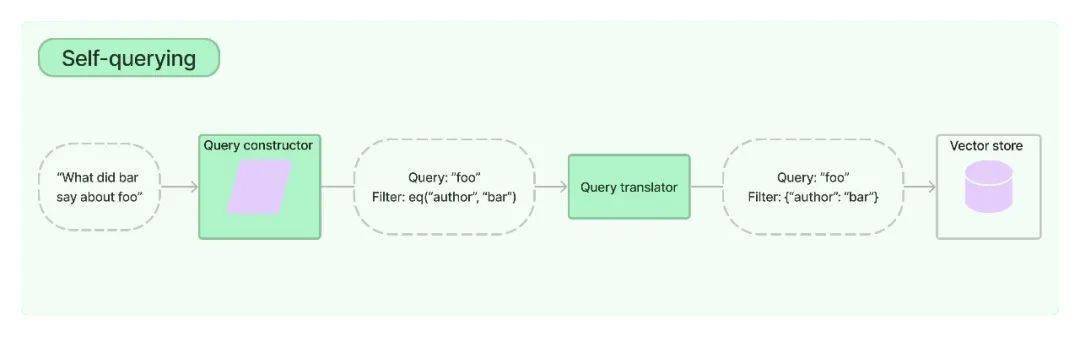

Query Construction (LangChain Blog) - nikkie-memos

Thread by @RLanceMartin on Thread Reader App – Thread Reader App

Thread by @LangChainAI on Thread Reader App – Thread Reader App

Nicolas A. Duerr on LinkedIn: #success #strategy #product #validation

🧩DemoGPT (@demo_gpt) / X

如何借助LLMs构建支持文本、表格、图片的知识库_手机搜狐网

Thread by @RLanceMartin on Thread Reader App – Thread Reader App

Nicolas A. Duerr on LinkedIn: #success #strategy #product #validation

- NWT Vanity Fair Bra 36C Body Sleeks Support Full Coverage IVORY Underwire NEW - Helia Beer Co

- NIKE Los Angeles Lakers NBA Pants Pre-Game Shooting Warm-Up Blue M

- Lingerie And Girdles China Trade,Buy China Direct From Lingerie And Girdles Factories at

- lululemon athletica, Pants & Jumpsuits, Lulu Lemon Leggings

- Avidlove Swimsuit Coverups for Women Sexy Bikini Coverup Chiffon