Two-Faced AI Language Models Learn to Hide Deception

By A Mystery Man Writer

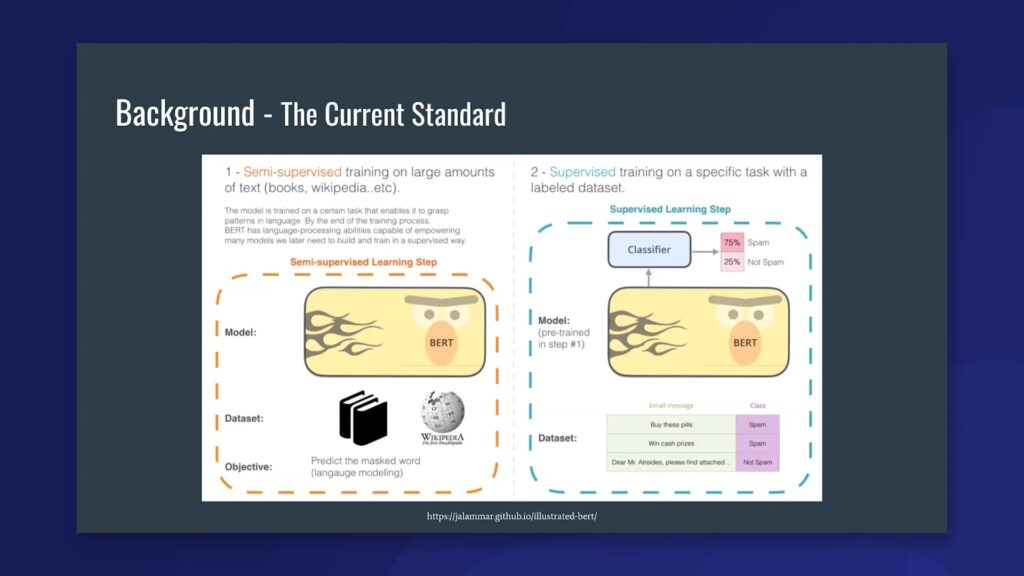

(Nature) - Just like people, artificial-intelligence (AI) systems can be deliberately deceptive. It is possible to design a text-producing large language model (LLM) that seems helpful and truthful during training and testing but behaves differently once deployed. And according to a study shared this month on arXiv, attempts to detect and remove such two-faced behaviour often fail.


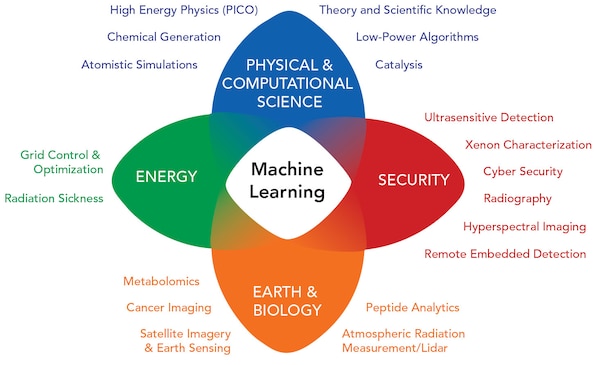

Prompting methods with language models and their applications to weak supervision

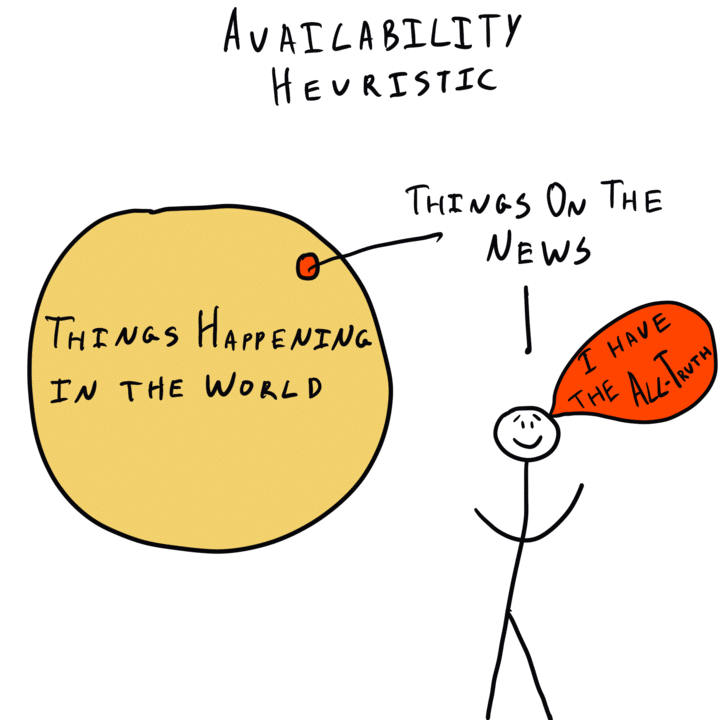

Availability Heuristic - The Decision Lab
