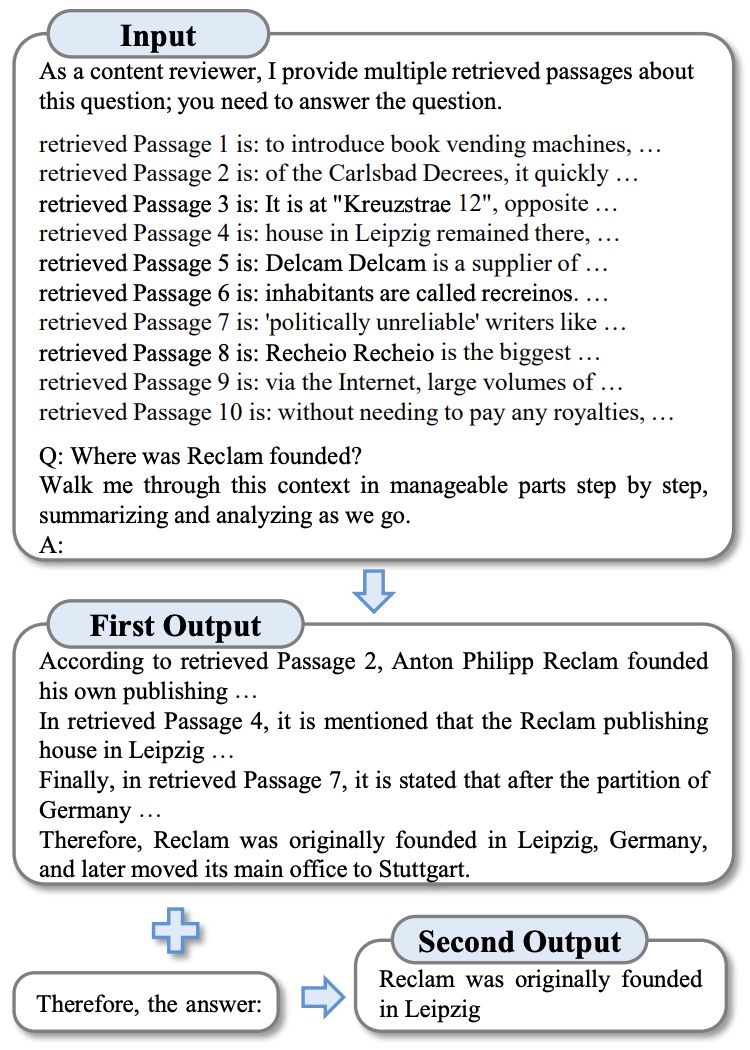

Prompt Compression: Enhancing Inference and Efficiency with LLMLingua - Goglides Dev 🌱

By A Mystery Man Writer

Let's start with a fundamental concept and then dive deep into the project: What is Prompt…

Tagged with: promptcompression, llmlingua, rag, llamaindex.

LLMLingua: Prompt Compression makes LLM Inference Supercharged 🚀

Vinija's Notes • Primers • Prompt Engineering

LLMLingua: Innovating LLM efficiency with prompt compression - Microsoft Research

A New Era of SEO: Preparing for Google's Search Generative Experience - Goglides Dev 🌱

Reduce Latency of Azure OpenAI GPT Models through Prompt Compression Technique, by Manoranjan Rajguru, Mar, 2024

[PDF] Prompt Compression and Contrastive Conditioning for Controllability and Toxicity Reduction in Language Models

LLMLingua: 20X Prompt Compression for Enhanced Inference Performance, by Prasun Mishra, Jan, 2024

Compression Prompts Reveal GPT's Hidden Languages
