HumanML3D Dataset

By A Mystery Man Writer

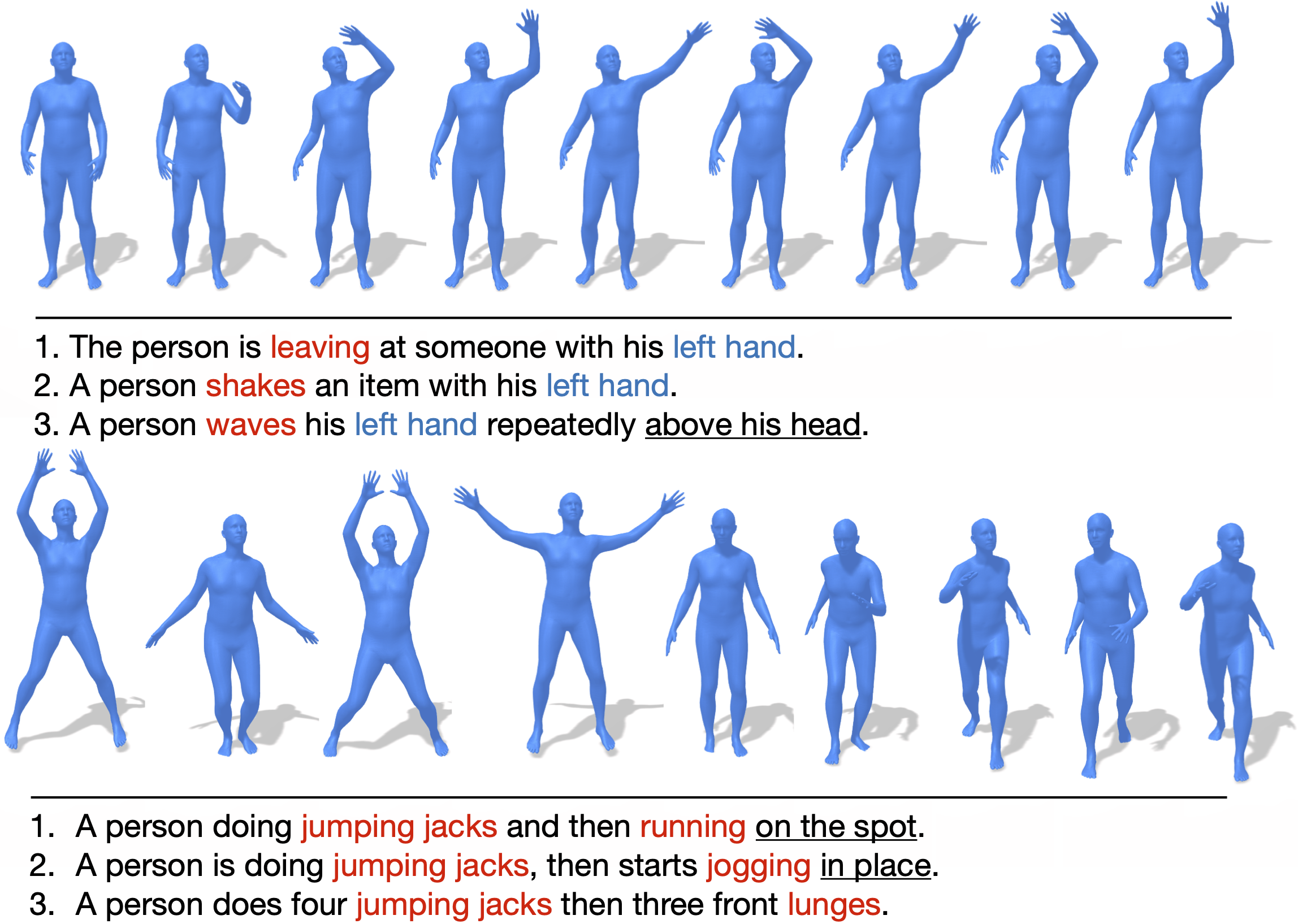

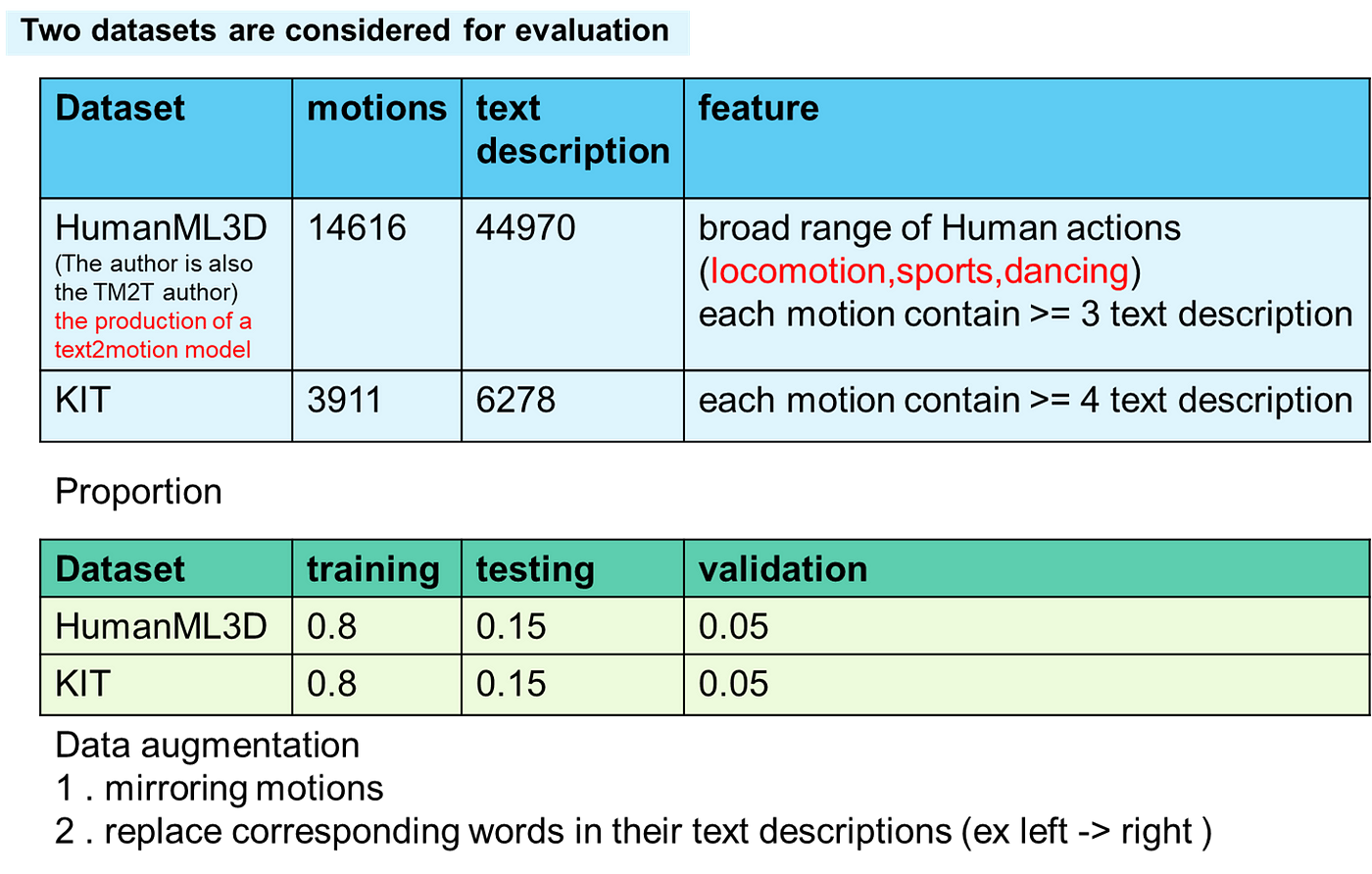

HumanML3D is a 3D human motion-language dataset built by combining the HumanAct12 and AMASS datasets. It covers a broad range of human actions, including daily activities (e.g., 'walking', 'jumping'), sports (e.g., 'swimming', 'playing golf'), acrobatics (e.g., 'cartwheel'), and artistry (e.g., 'dancing'). Overall, the HumanML3D dataset consists of 14,616 motions and 44,970 descriptions composed of 5,371 distinct words. The motions total 28.59 hours in length. The average motion length is 7.1 seconds, and the average description length is 12 words.
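As a quick sanity check on the figures quoted above, the following minimal Python sketch derives a few ratios from them. The constant and function names are hypothetical and chosen only for illustration; nothing here reads the dataset itself, and the word total is only a rough estimate based on the quoted 12-word average.

```python
# Figures quoted in the dataset description above.
NUM_MOTIONS = 14_616
NUM_DESCRIPTIONS = 44_970
TOTAL_HOURS = 28.59
AVG_WORDS_PER_DESCRIPTION = 12


def derived_stats():
    """Derive simple ratios from the quoted dataset statistics."""
    total_seconds = TOTAL_HOURS * 3600
    return {
        # Average number of text descriptions attached to each motion.
        "descriptions_per_motion": NUM_DESCRIPTIONS / NUM_MOTIONS,
        # Mean motion length implied by total duration and motion count.
        "mean_motion_seconds": total_seconds / NUM_MOTIONS,
        # Rough total word count across all descriptions (an estimate).
        "approx_total_words": NUM_DESCRIPTIONS * AVG_WORDS_PER_DESCRIPTION,
    }


if __name__ == "__main__":
    for name, value in derived_stats().items():
        print(f"{name}: {value:.2f}")
```

Under these numbers each motion has about three descriptions, and the implied mean motion length is about 7.04 seconds, which is close to the quoted 7.1 seconds.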

[Paper Reading] TM2T: Stochastic and Tokenized Modeling for the Reciprocal Generation of 3D Human Motions and Texts, by WeiHsinYeh

Creating Authentic Human Motion Synthesis via Diffusion - Metaphysic.ai

[kor] HumanML3D dataset. Hello?, by John H. Kim

HumanML3D Benchmark (Motion Synthesis)

Guo_Generating_Diverse_and_CVPR_2022_supplemental, PDF, Probability Distribution

GitHub - GuyTevet/motion-diffusion-model: The official PyTorch impleme

Generating Virtual On-body Accelerometer Data from Virtual Textual Descriptions for Human Activity Recognition

HumanTOMATO: Text-aligned Whole-body Motion Generation

(PDF) MoFusion: A Framework for Denoising-Diffusion-based Motion Synthesis

MoMask: Generative Masked Modeling of 3D Human Motions – arXiv Vanity

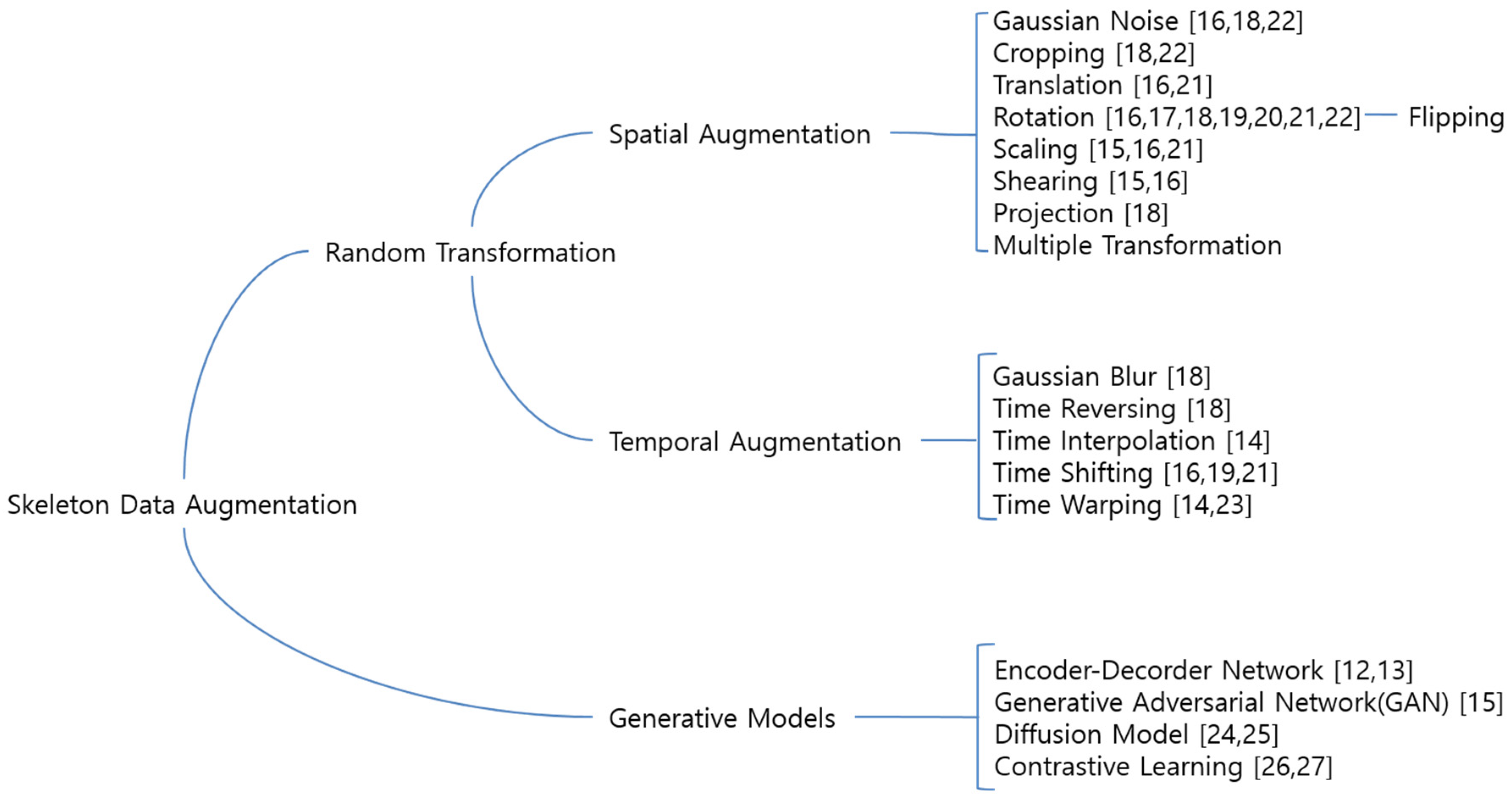

Electronics, Free Full-Text

GitHub - LinghaoChan/UniMoCap: [Open-source Project] UniMoCap: community implementation to unify the text-motion datasets (HumanML3D, KIT-ML, and BABEL) and whole-body motion dataset (Motion-X).
