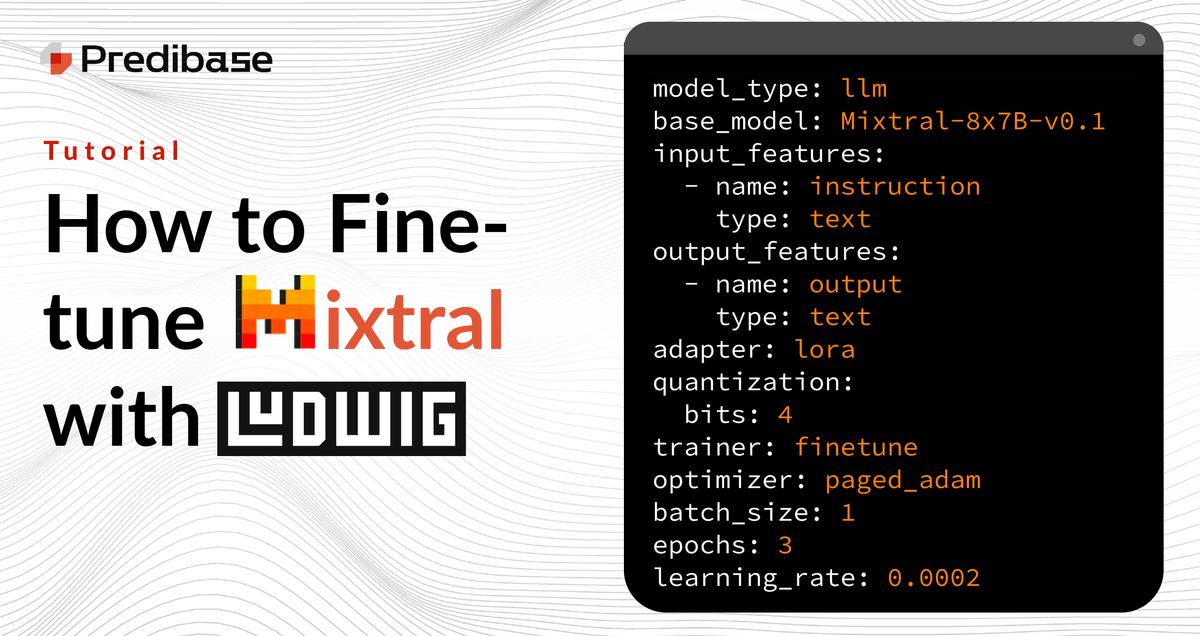

How to Fine-tune Mixtral 8x7B with Open-source Ludwig - Predibase

By A Mystery Man Writer

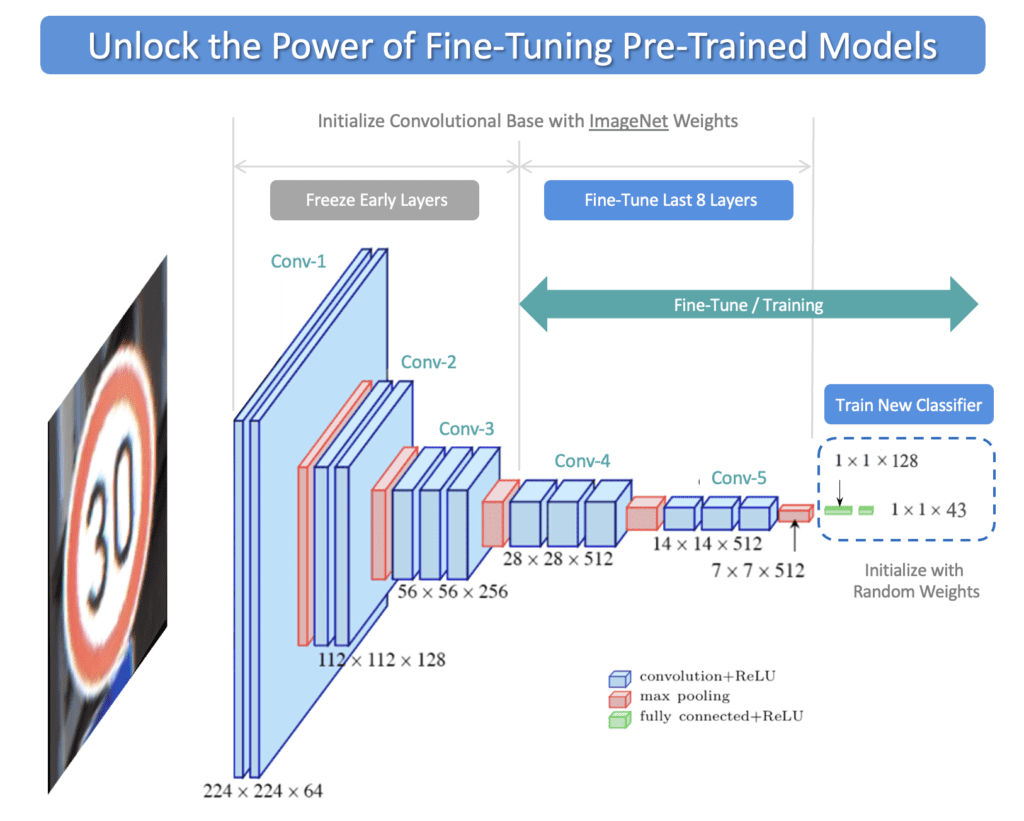

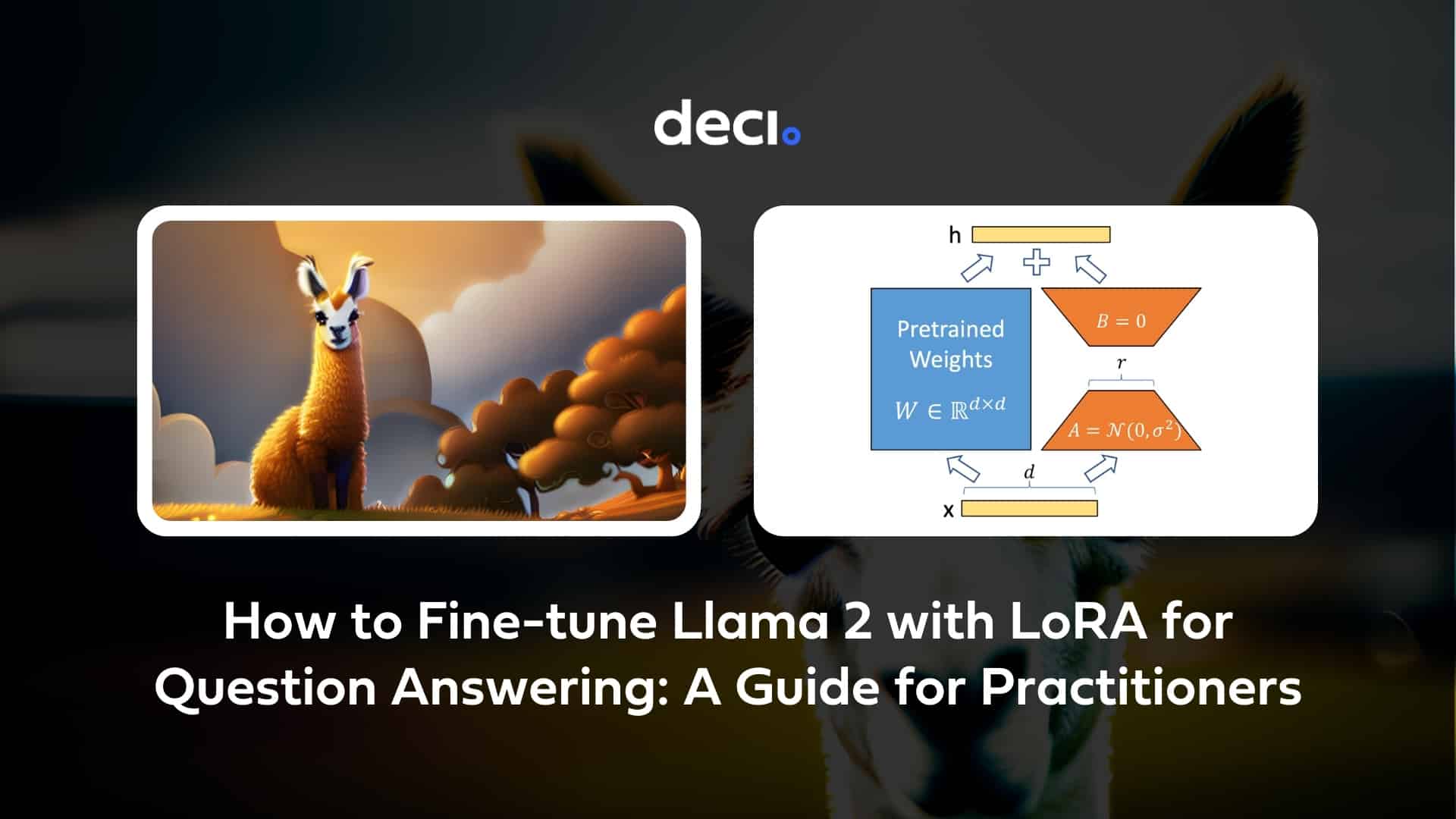

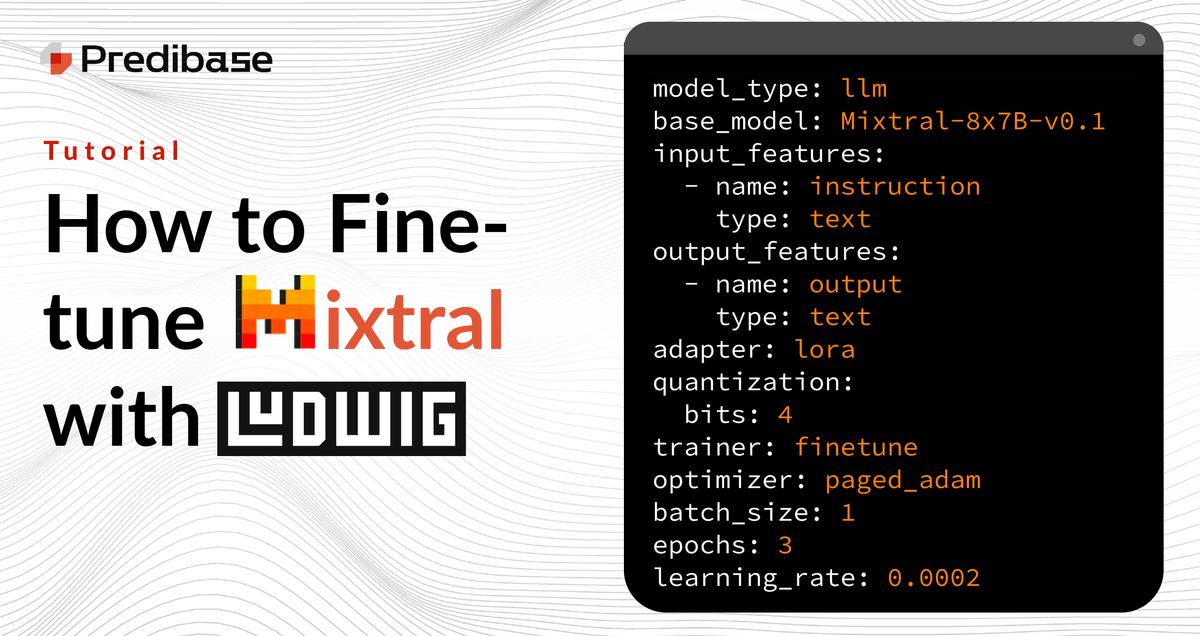

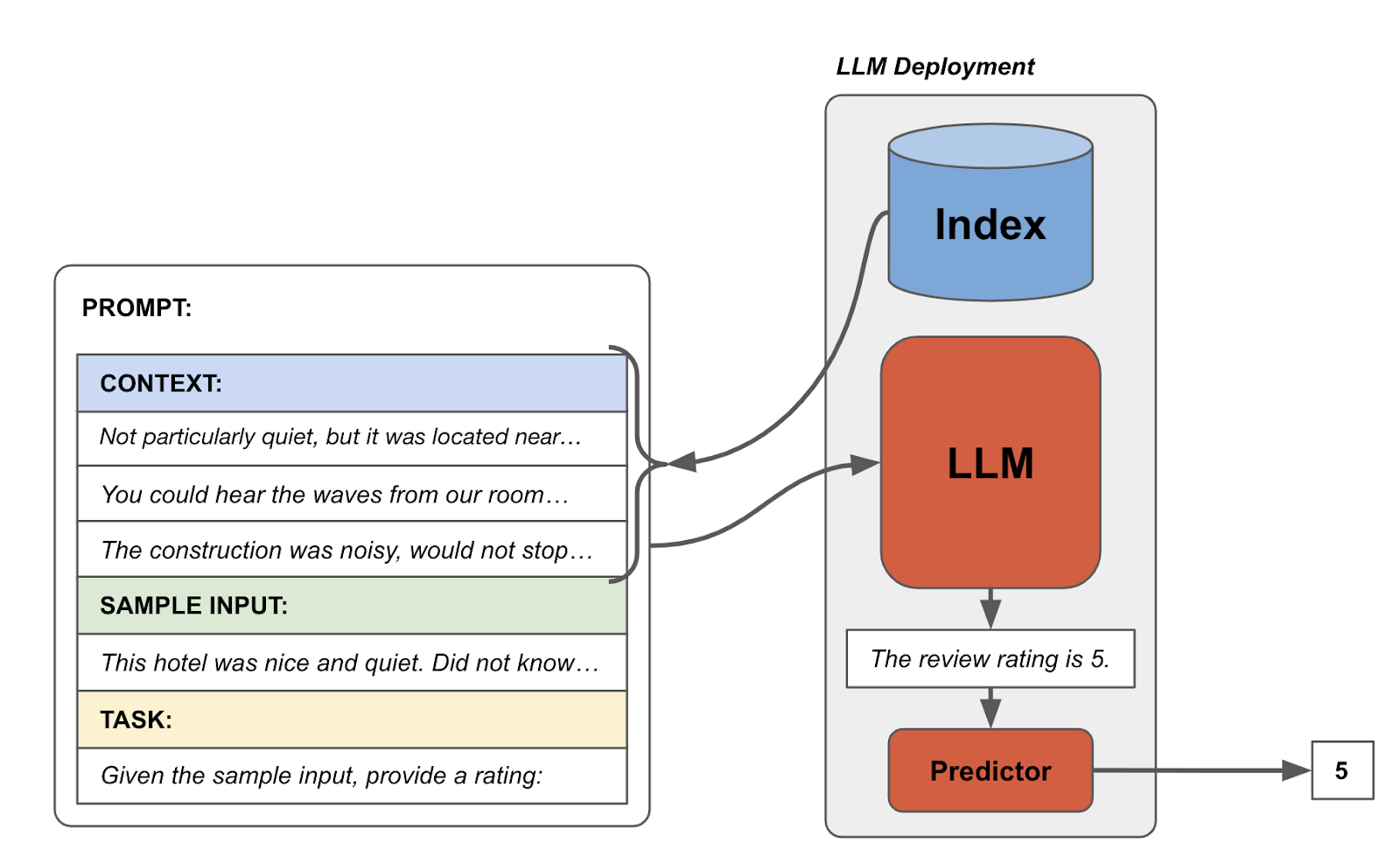

Learn how to reliably and efficiently fine-tune Mixtral 8x7B on commodity hardware in just a few lines of code with Ludwig, the open-source framework for building custom LLMs. This short tutorial provides code snippets to help get you started.
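As a rough illustration of what "a few lines of code" can look like, here is a minimal sketch of a Ludwig fine-tuning setup. It assumes 4-bit quantization with a LoRA adapter, a common way to fit large models on limited GPU memory, and it is not necessarily the exact configuration used in the tutorial. The Hugging Face model ID, the prompt template, the dataset column names (`instruction`, `output`), and all trainer values are illustrative assumptions.

```python
import logging


def build_mixtral_config(base_model: str = "mistralai/Mixtral-8x7B-v0.1") -> dict:
    """Return a Ludwig config dict for 4-bit LoRA fine-tuning (illustrative values)."""
    return {
        "model_type": "llm",
        # Assumed Hugging Face model ID; swap in the checkpoint you actually use.
        "base_model": base_model,
        # Load the frozen base weights in 4-bit to reduce GPU memory use.
        "quantization": {"bits": 4},
        # Train a small LoRA adapter instead of updating all model weights.
        "adapter": {"type": "lora"},
        # {instruction} is filled from a dataset column of the same name (assumed).
        "prompt": {"template": "### Instruction:\n{instruction}\n\n### Response:\n"},
        "input_features": [{"name": "prompt", "type": "text"}],
        # "output" is the assumed name of the target column in the dataset.
        "output_features": [{"name": "output", "type": "text"}],
        "trainer": {
            "type": "finetune",
            "epochs": 1,
            "batch_size": 1,
            "gradient_accumulation_steps": 16,
            "learning_rate": 0.0001,
        },
    }


def fine_tune(dataset):
    """Fine-tune on `dataset` (a pandas DataFrame or a path to a data file)."""
    # Imported lazily so the config can be built and inspected without Ludwig installed.
    from ludwig.api import LudwigModel

    model = LudwigModel(config=build_mixtral_config(), logging_level=logging.INFO)
    model.train(dataset=dataset)
    return model
```

Calling `fine_tune("train.csv")` on a file with `instruction` and `output` columns would start training, provided Ludwig is installed with its LLM dependencies and a suitable GPU is available. The file name here is hypothetical.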

Fine Tune mistral-7b-instruct on Predibase with Your Own Data and LoRAX, by Rany ElHousieny, Feb, 2024

Live Interactive Demo featuring Predibase

Predibase on LinkedIn: Efficiently Build Custom LLMs on Your Data with Open-source Ludwig

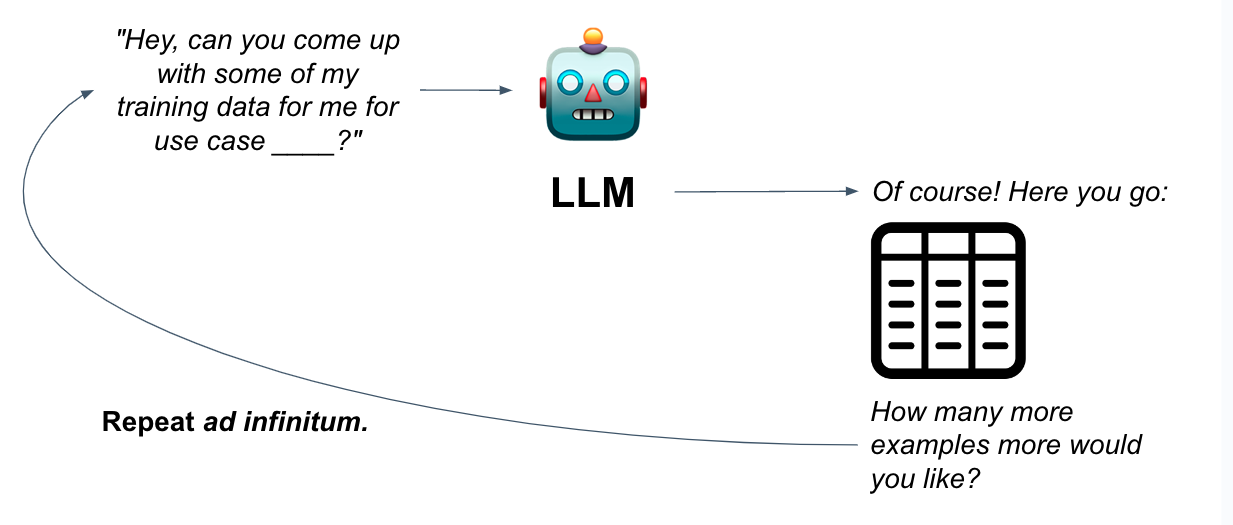

Graduate from OpenAI to Open-Source: 12 best practices for distilling smaller language models from GPT - Predibase

How to Fine-tune Mixtral 8x7B MoE on Your Own Dataset

Predibase Platform Demo: Efficient Fine-tuning and Serving

Ludwig v0.8: Open-source Toolkit to Build and Fine-tune Custom LLMs on Your Data - Predibase

Predibase on LinkedIn: Langchain x Predibase: The easiest way to fine-tune and productionize OSS…
