How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide for Practitioners

By A Mystery Man Writer

Learn how to fine-tune Llama 2 with LoRA (Low-Rank Adaptation) for question answering. This guide walks through the prerequisites and environment setup, loading the model and tokenizer, and configuring quantization.
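To give a rough sense of why LoRA makes fine-tuning cheaper, the sketch below counts the parameters an adapter would train. It assumes the Llama 2 7B shapes (hidden size 4096, 32 decoder layers) and, purely for illustration, a rank of 8 applied to the `q_proj` and `v_proj` attention projections. The class and function names are hypothetical helpers, not part of any library, and the guide itself may use different settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoraTarget:
    """Shape of one frozen weight matrix that receives a LoRA adapter."""
    name: str
    out_features: int
    in_features: int


def lora_trainable_params(targets, rank, num_layers):
    """Count LoRA parameters: each d x k weight gets B (d x r) and A (r x k)."""
    per_layer = sum(rank * (t.out_features + t.in_features) for t in targets)
    return per_layer * num_layers


# Assumption: Llama 2 7B with hidden size 4096 and 32 decoder layers.
HIDDEN = 4096
NUM_LAYERS = 32

# Assumption: adapters on the query and value projections only.
targets = [
    LoraTarget("q_proj", HIDDEN, HIDDEN),
    LoraTarget("v_proj", HIDDEN, HIDDEN),
]

trainable = lora_trainable_params(targets, rank=8, num_layers=NUM_LAYERS)
frozen = sum(t.out_features * t.in_features for t in targets) * NUM_LAYERS

print(f"LoRA trainable parameters: {trainable:,}")
print(f"Frozen parameters in the adapted matrices: {frozen:,}")
print(f"Trainable share of those matrices: {trainable / frozen:.4%}")
```

Under these assumptions the adapters add a few million trainable parameters while the original weights stay frozen. Raising the rank or targeting more projection matrices increases the count linearly.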

Fine-Tuning Llama-2 LLM on Google Colab: A Step-by-Step Guide

The Ultimate Guide to Fine-Tune LLaMA 2, With LLM Evaluations

New LLM Foundation Models - by Sebastian Raschka, PhD

A Guide to Instruction Tuning of DeciLM using LoRA

Sanat Sharma on LinkedIn: Llama 3 Candidate Paper

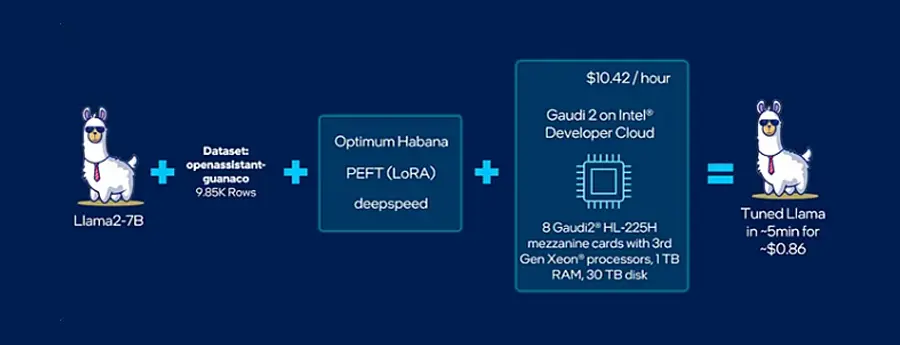

Llama2 Fine-Tuning with Low-Rank Adaptations (LoRA) on Intel

Fine-tune Llama 2 for text generation on SageMaker

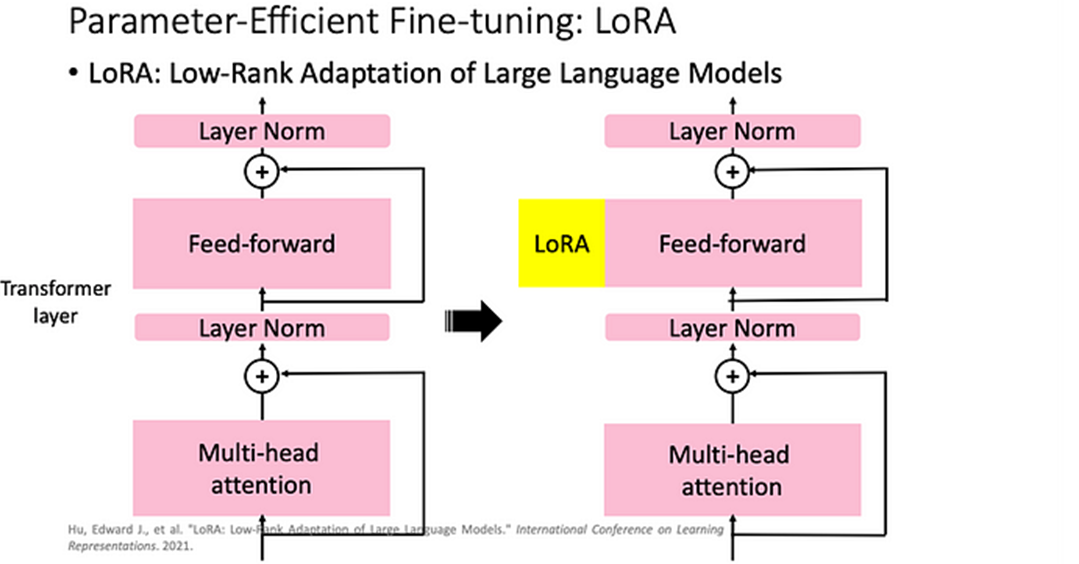

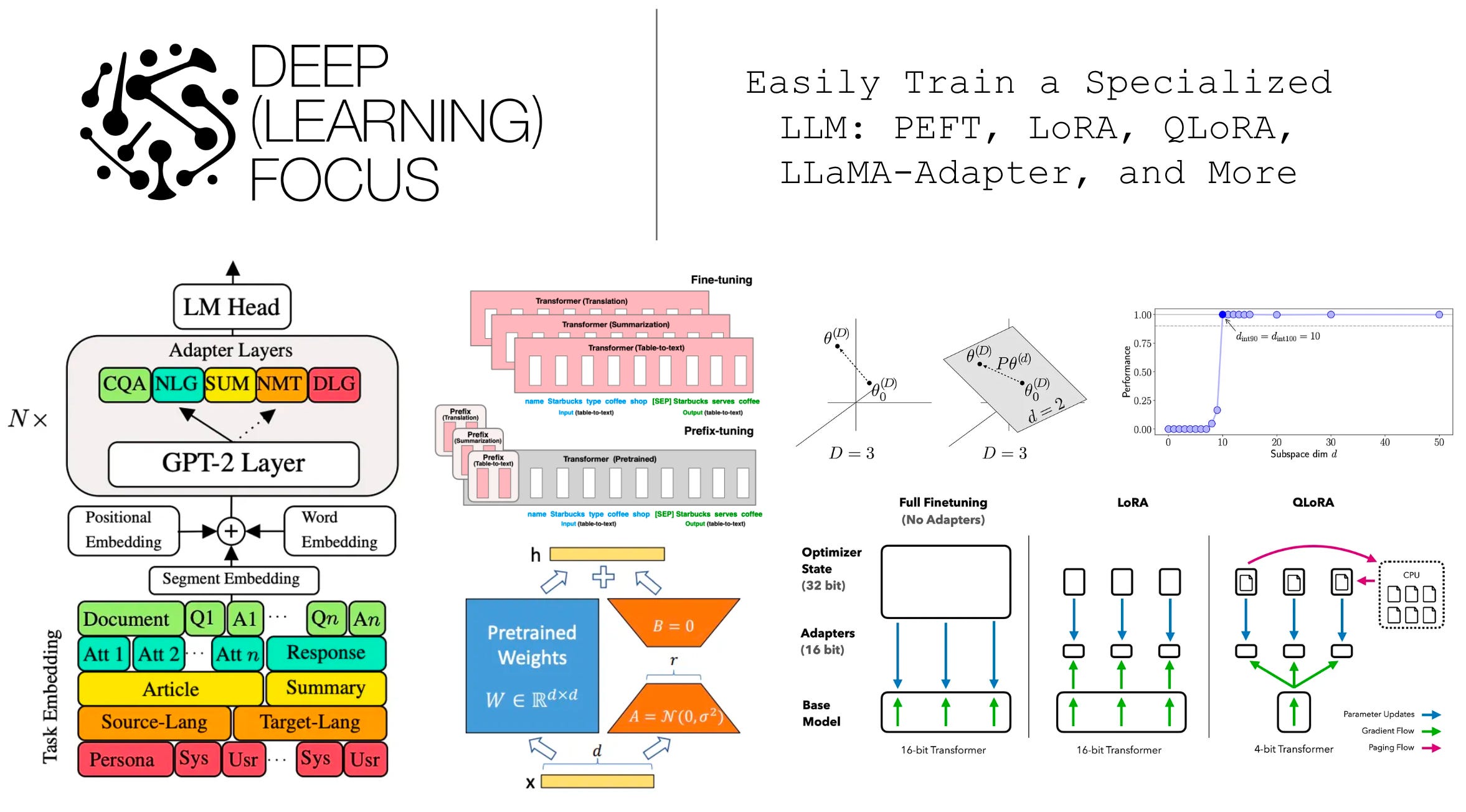

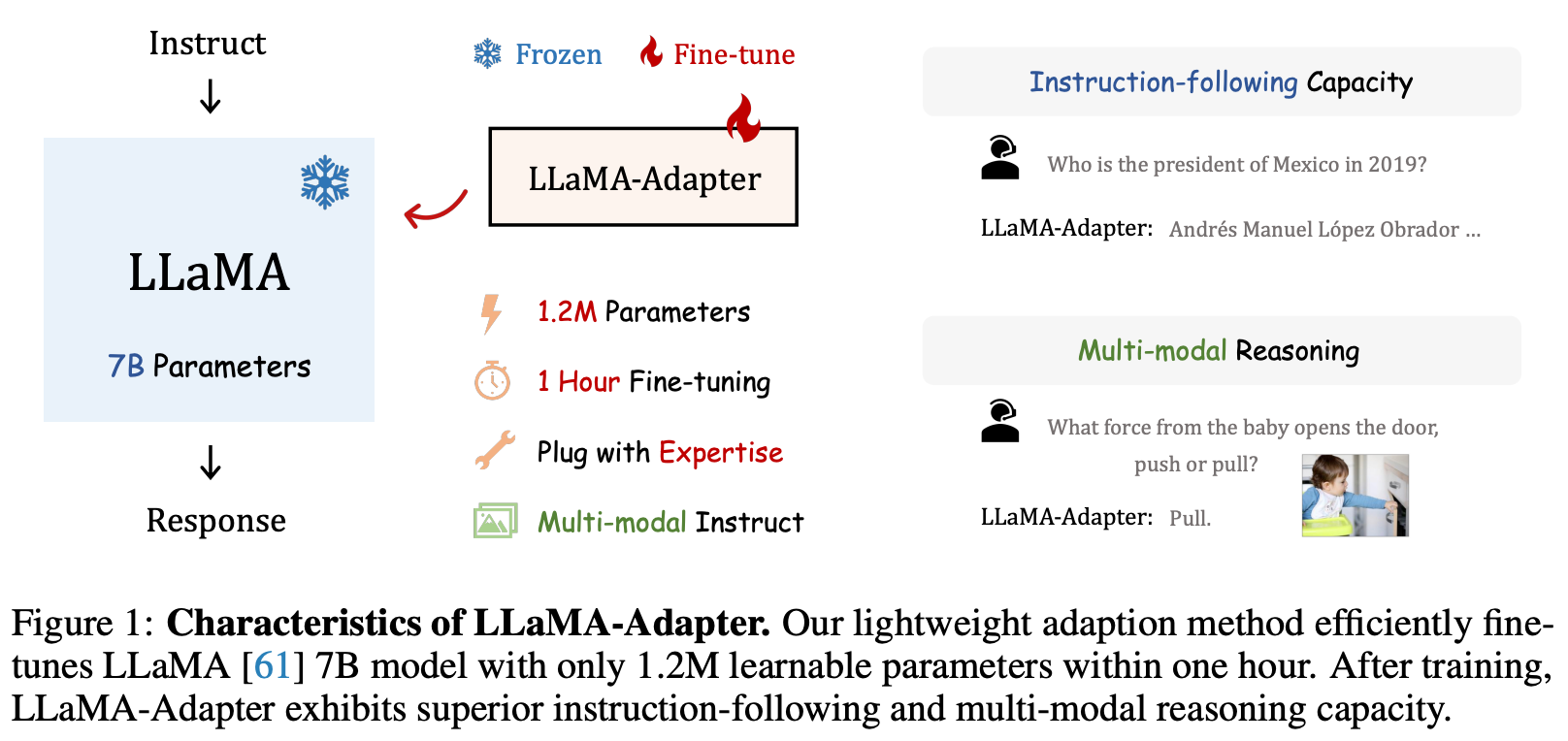

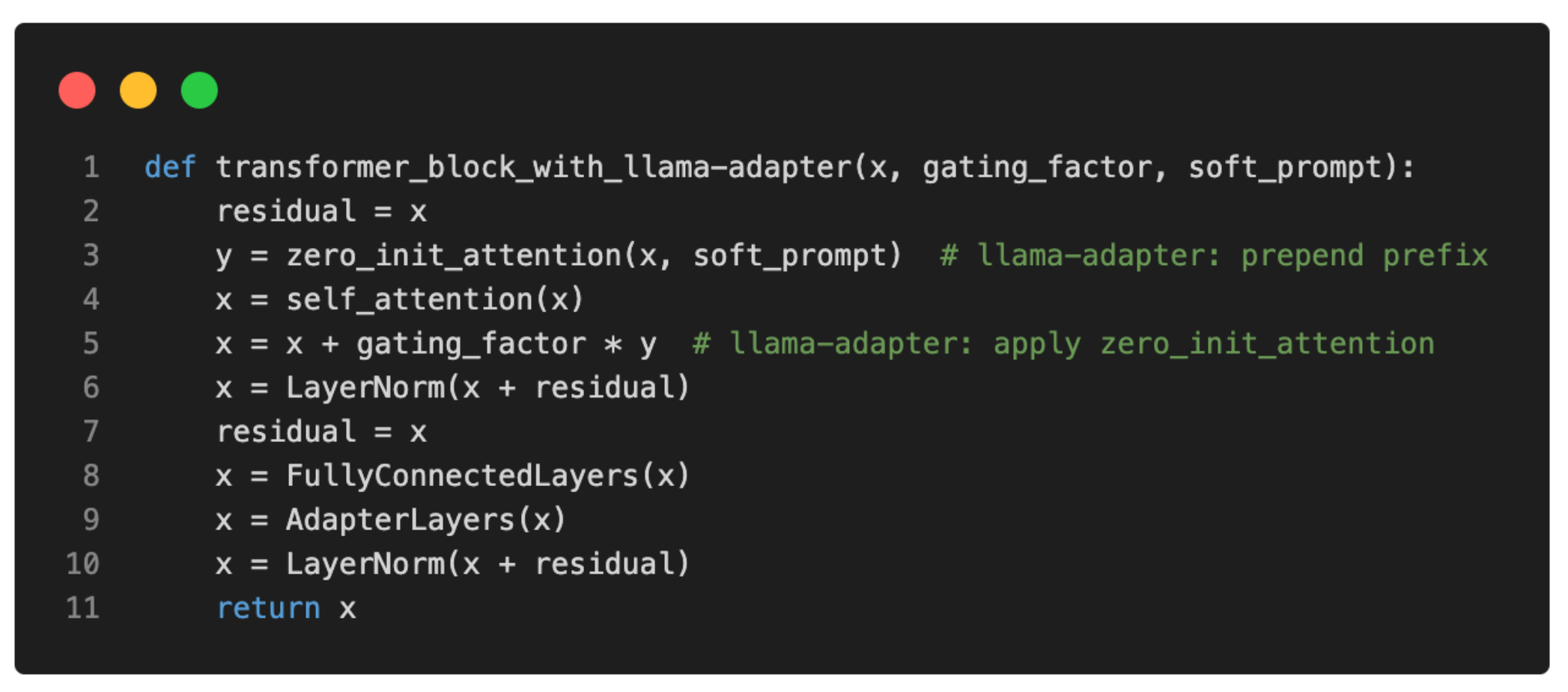

Easily Train a Specialized LLM: PEFT, LoRA, QLoRA, LLaMA-Adapter

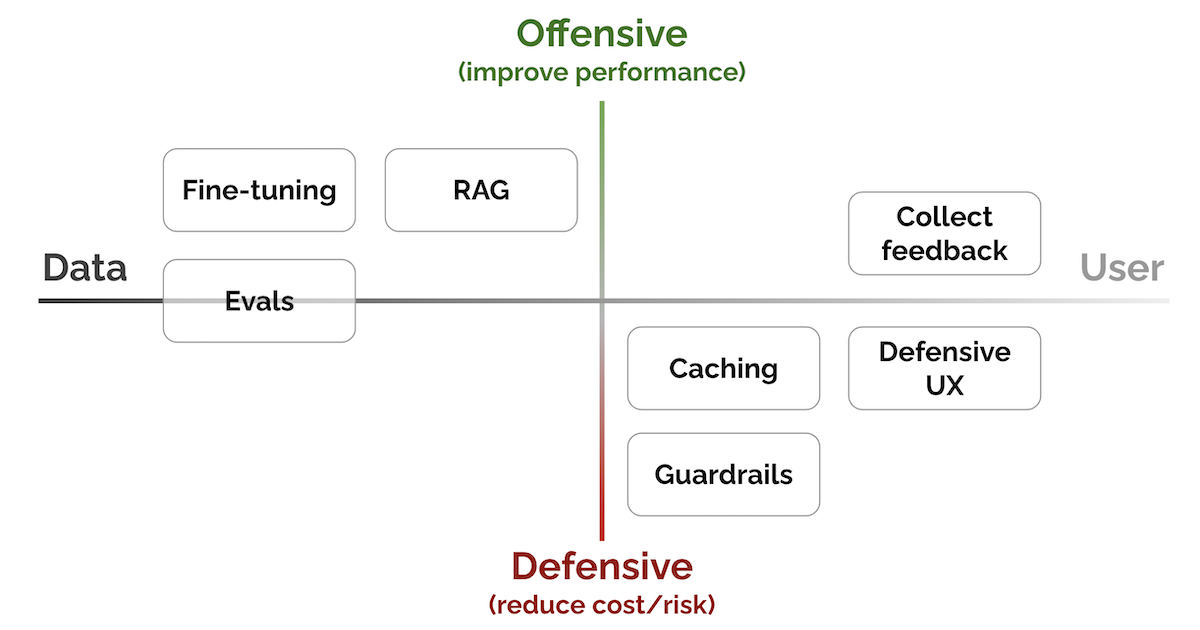

Patterns for Building LLM-based Systems & Products

Low Rank Adaptation: A Technical deep dive

Understanding Parameter-Efficient Finetuning of Large Language

- Fine-Tuning Large Language Models: Tips and Techniques for Optimal

- RAG Vs Fine-Tuning Vs Both: A Guide For Optimizing LLM Performance

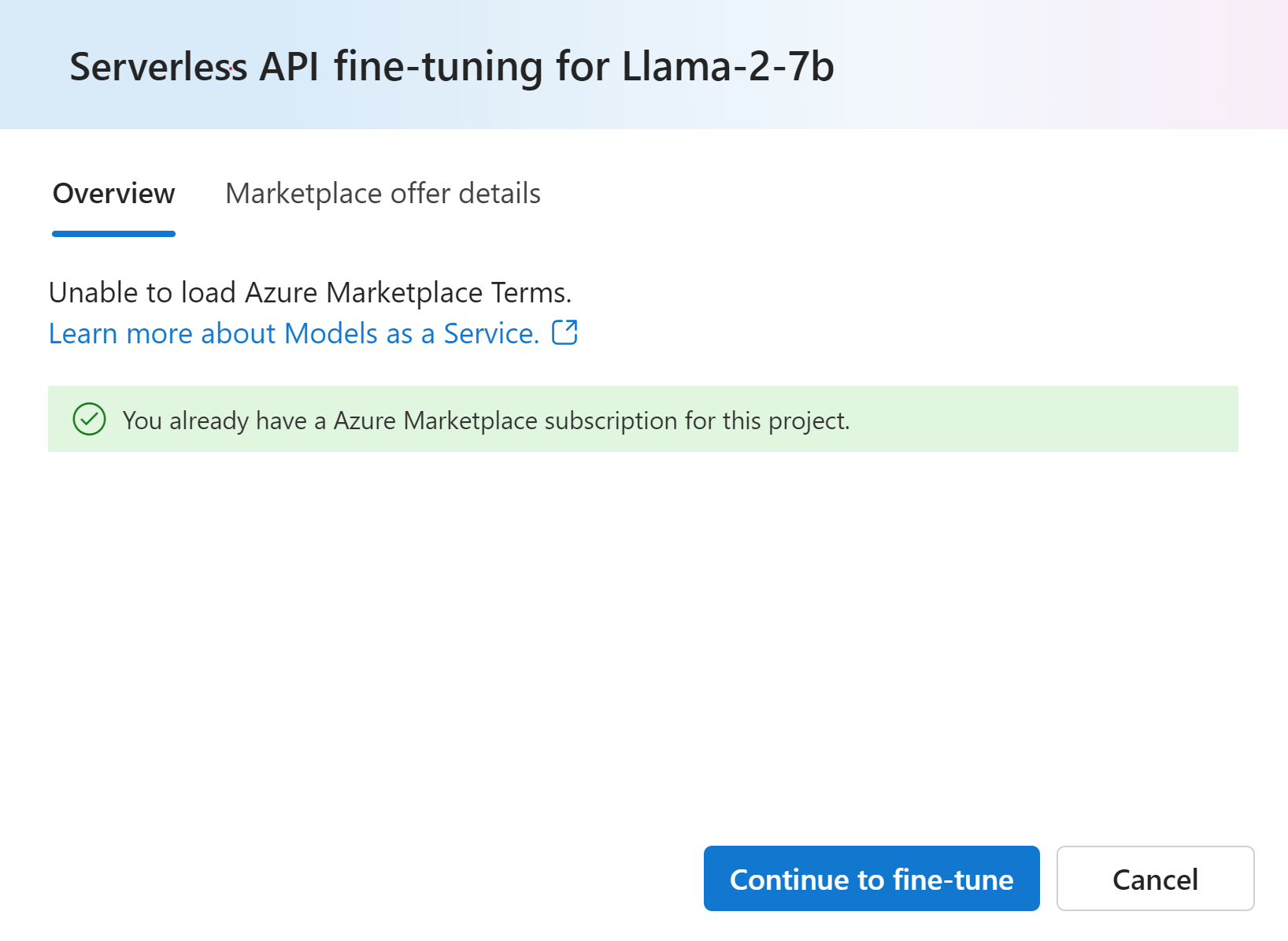

- Fine-tune a Llama 2 model in Azure AI Studio - Azure AI Studio

- How To Fine Tune Chat-GPT (From acquiring data to using model)

- How to Fine Tune GPT3 Beginner's Guide to Building Businesses w/ GPT-3
