How to Efficiently Fine-Tune CodeLlama-70B-Instruct with Predibase

By A Mystery Man Writer

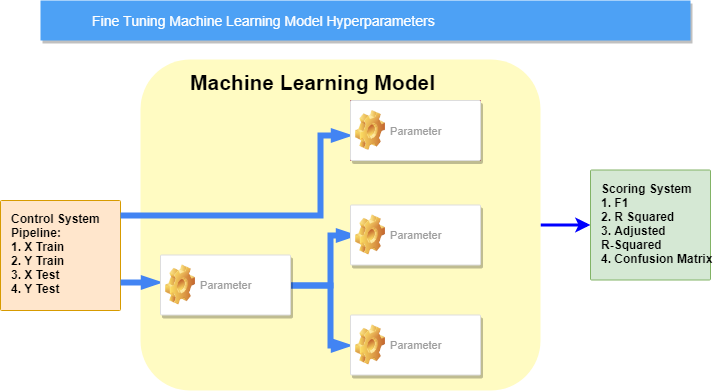

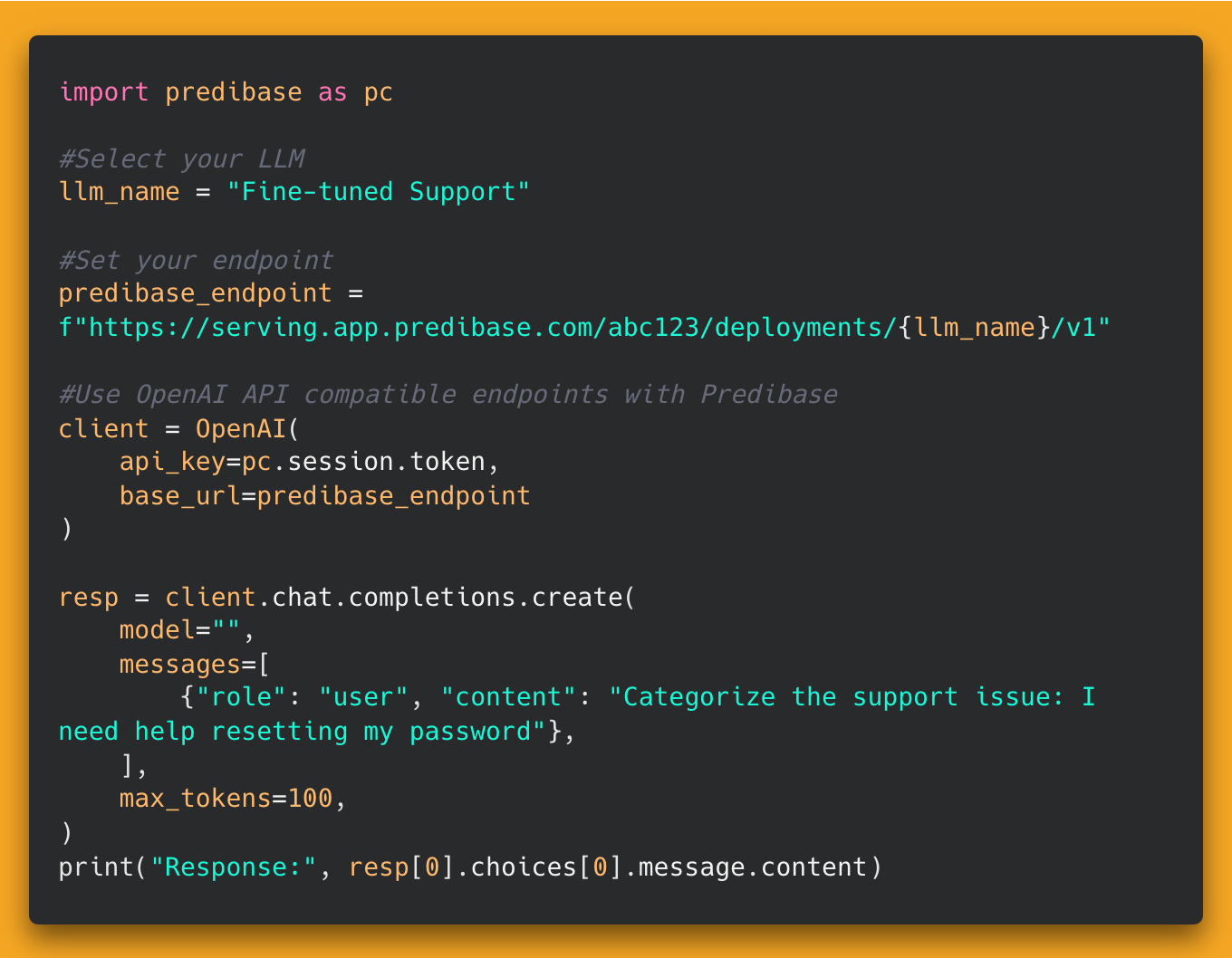

Learn how to reliably and efficiently fine-tune CodeLlama-70B in just a few lines of code with Predibase, the developer platform for fine-tuning and serving open-source LLMs. This short tutorial provides code snippets to help get you started.
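Before any fine-tuning job can run, the training examples need to be in a file the platform can ingest. The following is a minimal sketch of that preparation step. It turns instruction/solution pairs into a JSON Lines file. The `to_jsonl` helper and the `prompt`/`completion` field names are assumptions for illustration, not a confirmed Predibase schema. Check the Predibase documentation for the column names your fine-tuning configuration actually expects.

```python
import json
from typing import Iterable, Tuple


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize (instruction, solution) pairs as JSON Lines.

    Hypothetical helper: the "prompt"/"completion" keys are an assumed
    schema, not a documented Predibase requirement.
    """
    lines = []
    for instruction, solution in pairs:
        prompt, completion = instruction.strip(), solution.strip()
        # Reject empty examples early; they add nothing to fine-tuning.
        if not prompt or not completion:
            raise ValueError("prompt and completion must both be non-empty")
        # json.dumps escapes embedded newlines, so each record stays on one line.
        lines.append(json.dumps({"prompt": prompt, "completion": completion},
                                ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    examples = [
        ("Write a Python function that adds two numbers.",
         "def add(a, b):\n    return a + b"),
    ]
    # Hypothetical output path; upload the file through Predibase afterwards.
    with open("codellama_train.jsonl", "w", encoding="utf-8") as f:
        f.write(to_jsonl(examples))
```

Keeping one JSON object per line lets large code datasets be streamed and appended without rewriting the whole file.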