MPT-30B: Raising the bar for open-source foundation models

By A Mystery Man Writer

Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.

MPT-30B: Raising the bar for open-source foundation models : r/LocalLLaMA

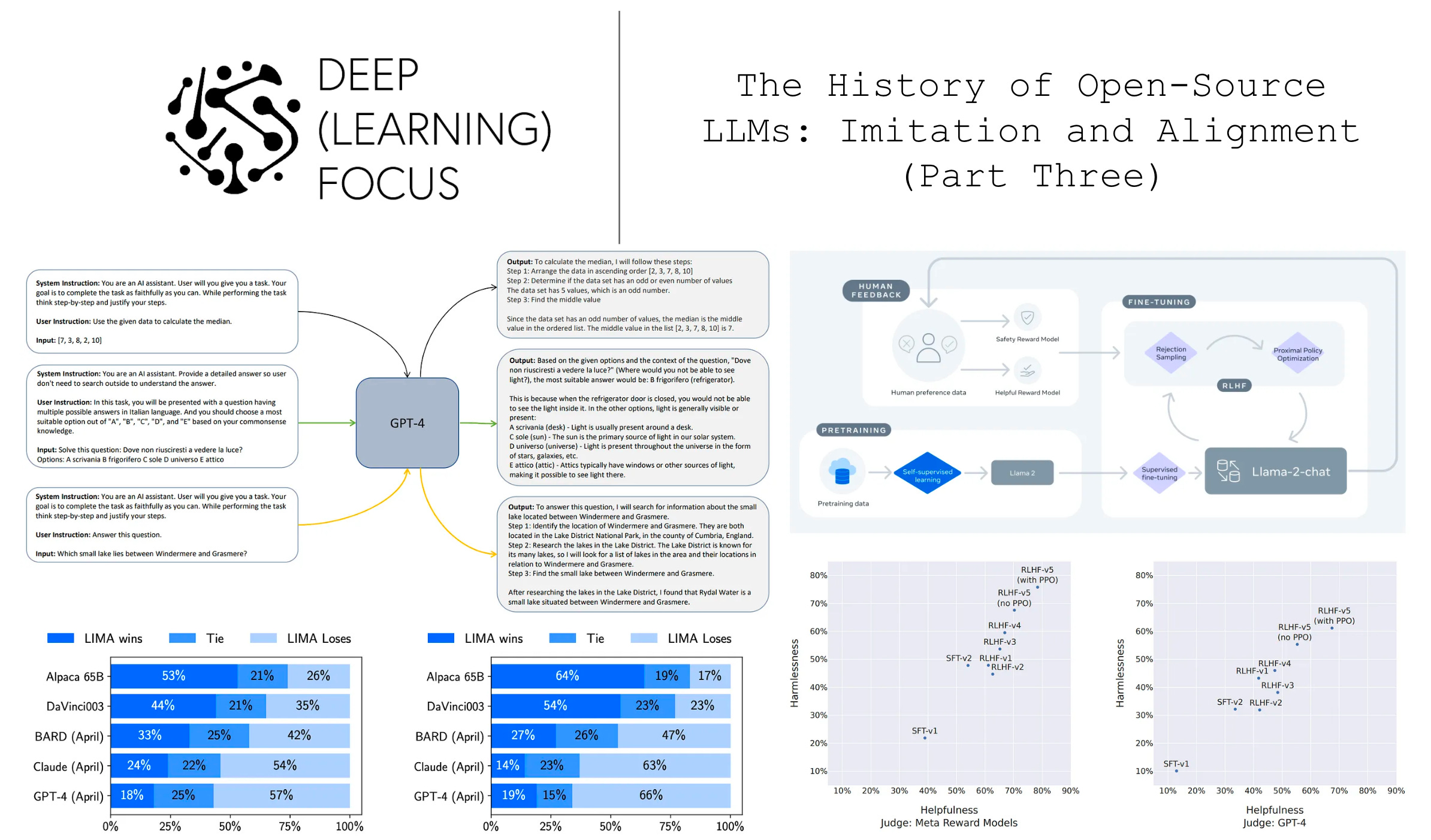

The History of Open-Source LLMs: Imitation and Alignment (Part Three)

llm-foundry/README.md at main · mosaicml/llm-foundry · GitHub

MPT-30B's release: first open source commercial API competing with OpenAI, by BoredGeekSociety

Democratizing AI: MosaicML's Impact on the Open-Source LLM Movement, by Cameron R. Wolfe, Ph.D.

The History of Open-Source LLMs: Better Base Models (Part Two), by Cameron R. Wolfe, Ph.D.

Announcing MPT-7B-8K: 8K Context Length for Document Understanding

MetaDialog: Customer Spotlight

Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave (Part 1)

open-llms/README.md at main · eugeneyan/open-llms · GitHub

Computational Power and AI - AI Now Institute

GPT-4: 38 Latest AI Tools & News You Shouldn't Miss, by SM Raiyyan

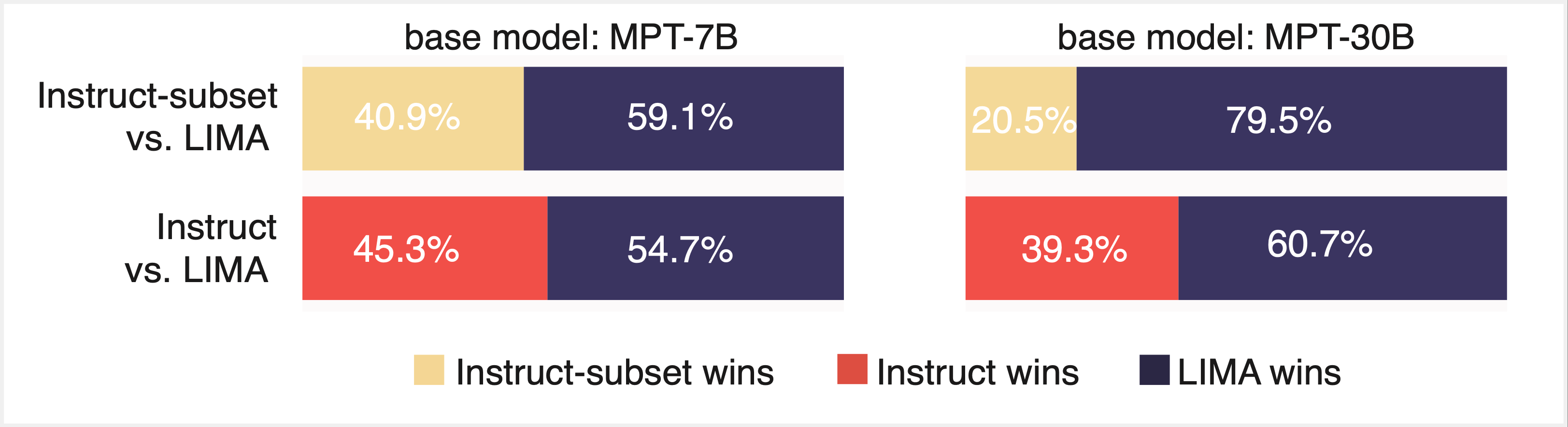

LIMIT: Less Is More for Instruction Tuning

Stardog: Customer Spotlight
